Over the past few months, AI chatbots have exploded in popularity off the surging success of OpenAI’s revolutionary ChatGPT, which, amazingly, only burst onto the scene around December. But when Microsoft seized the chance to hitch its wagon to OpenAI’s rising star for a steep $10 billion, it chose to do so by introducing a GPT-4-powered chatbot under the guise of Bing, its swell-but-also-ran search engine, in a bid to upend Google’s search dominance. Google quickly followed suit with its own homegrown Bard AI and unveiled plans to put AI answers before traditional search results, an utterly monumental alteration to one of the most vital places on the Internet.

Both are touted as experiments. And these “AI chatbots” are truly wondrous advancements. I’ve spent many nights with my kids joyously creating fantastic stuff-of-your-dreams artwork with Bing Chat’s Dall-E integration and prompting sick raps about wizards who think lizards are the source of all magic, and seeing them come to life in mere moments with these fantastic tools. I love ‘em.

But Microsoft and Google’s marketing got it wrong. AI chatbots like ChatGPT, Bing Chat, and Google Bard shouldn’t be lumped in with search engines whatsoever, much less power them. They’re more like those crypto bros clogging up the comments in Elon Musk’s awful new Twitter, loudly and confidently braying truthy-sounding statements that in reality are often full of absolute bullshit.

These so-called “AI chatbots” do a fantastic job of synthesizing information and providing entertaining, oft-accurate details about whatever you query. But under the hood, they’re actually large language models (LLMs) trained on billions or even trillions of points of data (text) that they learn from in order to anticipate which words should come next based off your query. AI chatbots aren’t intelligent at all. They draw on patterns of word association to generate results that sound plausible for your query, then state them definitively with no idea of whether or not those strung-together words are actually true. Heck, Google’s AI can’t even get facts about Google products correct.
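To make the “anticipate which words should come next” idea concrete, here is a toy sketch in Python. It is a simple word-pair counter, nowhere near a real LLM, and the training text, the function names, and the `max_words` parameter are all made up for illustration. What it shares with the real thing is the core loop: pick a likely next word based on patterns in the training text, with no step that checks whether the result is true.

```python
from collections import Counter, defaultdict

# Tiny made-up training text (an assumption, purely for illustration).
CORPUS = (
    "wizards think lizards are magic . "
    "wizards think lizards are the source of all magic . "
    "lizards are the source of all magic ."
)

def train_bigrams(text):
    """Count how often each word is followed by each other word."""
    follows = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def generate(follows, start, max_words=9):
    """Keep appending the most frequent next word. Nothing is fact-checked."""
    out = [start]
    for _ in range(max_words - 1):
        candidates = follows.get(out[-1])
        if not candidates:
            break  # the last word never appeared mid-text, so stop
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)

model = train_bigrams(CORPUS)
print(generate(model, "wizards"))
```

Run as written, this prints “wizards think lizards are the source of all magic”: fluent, confident, and based on nothing but which words tended to sit next to each other in the training text.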

I don’t know who coined the term originally, but the memes are right: These chatbots are essentially autocorrect on steroids, not reliable sources of information like the search engines they’re being glommed onto, despite the implication of trust that association provides.

They’re bullshit generators. They’re crypto bros.

Further reading: ChatGPT vs. Bing vs. Bard: Which AI is best?

AI chatbots say the darndest things

Mark Hachman/IDG

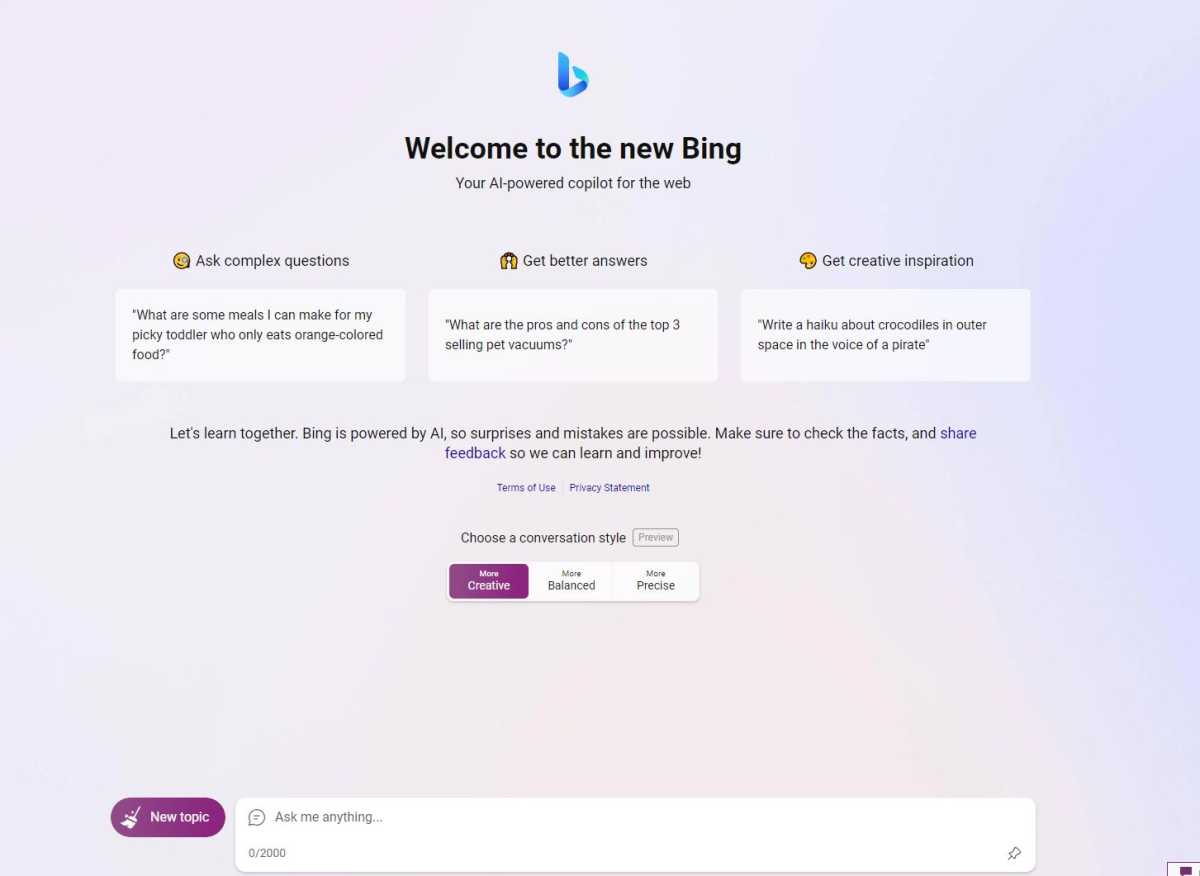

The signs were there immediately. Beyond all the experiment talk, Microsoft and Google were both sure to emphasize that these LLMs sometimes generate inaccurate results (“hallucinating,” in AI technospeak). “Bing is powered by AI, so surprises and mistakes are possible,” Microsoft’s disclaimer states. “Make sure to check the facts, and share feedback so we can learn and improve!” That was driven home when journalists discovered embarrassing inaccuracies in the glitzy launch presentations for Bard and Bing Chat alike.

Those falsehoods suck when you’re using Bing and, you know, Google, the world’s biggest two search engines. But conflating search engines with large language models has even deeper implications, as driven home by a recent Washington Post report chronicling how OpenAI’s ChatGPT “invented a sexual harassment scandal and named a real law prof as the accused,” as the headline aptly summarized.

It’s exactly what it sounds like. But it’s much worse because of how this hallucinated “scandal” was discovered.

Yes, the Bing Chat interface says ‘surprises and mistakes are possible,’ but you enter it via the Bing search engine and this design insinuates you’ll get ‘better answers’ to even complex questions despite the tendency for AI hallucinations to get things wrong.

Brad Chacos/IDG

You should go read the article. It’s both great and terrifying. Essentially, law professor Jonathan Turley was contacted by a fellow lawyer who asked ChatGPT to generate a list of legal scholars guilty of sexual harassment. Turley’s name was on the list, complete with a citation of a Washington Post article. But Turley hasn’t been accused of sexual harassment, and that Post article doesn’t exist. The large language model hallucinated it, likely drawing off Turley’s record of providing press interviews on law subjects to publications like the Post.

“It was quite chilling,” Turley told The Post. “An allegation of this kind is incredibly harmful.”

You’re damned right it is. An allegation like that can ruin someone’s career, especially since Microsoft’s Bing Chat AI quickly started spouting similar allegations with Turley’s name in the news. “Now Bing is also claiming Turley was accused of sexually harassing a student on a class trip in 2018,” the Post’s Will Oremus tweeted. “It cites as a source for this claim Turley’s own USA Today op-ed about the false claim by ChatGPT, along with several other aggregations of his op-ed.”

I’d be furious, and furiously suing every company involved in the slanderous claims, made under the corporate banners of OpenAI and Microsoft. Funnily enough, an Australian mayor threatened just that around the same time the Post report published. “Regional Australian mayor [Brian Hood] said he may sue OpenAI if it does not correct ChatGPT’s false claims that he had served time in prison for bribery, in what would be the first defamation lawsuit against the automated text service,” Reuters reported.

OpenAI’s ChatGPT is catching the brunt of these lawsuits, possibly because it’s at the forefront of “AI chatbots” and was the fastest-adopted technology ever. (Spitting out libelous, hallucinated claims doesn’t help.) But Microsoft and Google are causing just as much harm by associating chatbots with search engines. They’re too inaccurate for that, at least at this stage.

Turley and Hood’s examples may be extreme, but if you spend any amount of time playing around with these chatbots, you’re bound to stumble into more insidious inaccuracies, nonetheless stated with full confidence. Bing, for example, misgendered my daughter when I asked about her, and when I had it craft a personalized resume from my LinkedIn profile, it got a lot correct, but also hallucinated skills and previous employers wholecloth. That could be devastating to your job prospects if you aren’t paying close attention. Again, Bard’s reveal demonstration included obvious falsehoods about the James Webb space telescope that astronomers identified instantly. Using these supposedly search engine-adjacent tools for research could wreck your kid’s school grades.

It didn’t have to be this way

AI chatbots have a big microphone and all the boisterous, misplaced confidence of that guy always yelling about sports and politics at the bar.

Bing Chat / Brad Chacos / IDG

The hallucinations sometimes spit out by these AI tools aren’t as painful in more creative endeavors. AI art generators rock, and Microsoft’s killer-looking Office AI enhancements (which can create full PowerPoint presentations out of reference documents you cite, and more) seem poised to bring radical improvements to desk drones like yours truly. But those tasks don’t have the strict accuracy expectations that come with search engines.

It didn’t have to be this way. Microsoft and Google’s marketing really dropped the ball here by associating large language models with search engines in the eyes of the public, and I hope it doesn’t wind up permanently poisoning the well of perception. These are fantastic tools.

I’ll end this piece with a tweet from Steven Sinofsky, who was replying to commentary about severely wrong ChatGPT hallucinations causing headaches for an inaccurately cited researcher. Sinofsky is an investor who led Microsoft Office and Windows 7 to glory back in the day, so he knows what he’s talking about.

“Imagine a world where this was called ‘Creative Writer’ and not ‘Search’ or ‘Ask anything about the world,’” he said. “This is just a branding fiasco right now. Maybe in 10 years of progress, many more technology layers, and so on it will come to be search.”

For now, however, AI chatbots are crypto bros. Have fun, bask in the possibilities these wondrous tools unlock, but don’t take their information at face value. It’s truthy, not trustworthy.

Editor’s note: This article originally published on April 7, 2023, but was updated on May 12 after Google announced plans to put AI answers at the top of search results.