Back in February 2025, several media outlets warned of a new threat targeting users of Google’s Gmail email service. Attackers were using AI technology to perfect phishing emails and make them look more convincing.

To do this, the AI collected freely available information from social networks, websites, and online forums and used it to formulate a deceptively genuine-looking email that appeared to come from an acquaintance, family member, or superior.

What’s more, to make sure the message really looked genuine, the AI also generated suitable domains for the sender addresses. The scam was dubbed “Deepphish”, a portmanteau of the terms deep learning and phishing.

Even though the report raises some questions at first glance, such as why Gmail users in particular were affected by the Deepphish attack, it nevertheless highlights a development that experts had been anticipating for some time: criminal groups are increasingly using AI tools to perfect their attacks.

Domains created with AI

One of the weak points of conventional phishing attacks has always been the sender address. Most phishing emails can easily be identified by it.

For example, a message from a streaming service such as Netflix or Disney with an address like [email protected] is almost certainly a fake, no matter how good the rest of the presentation may be.
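A mail client or filter can make this check mechanically: take the domain from the From: header and compare it with the domains the claimed brand is known to send from. The Python sketch below does just that using only the standard library. The `KNOWN_BRAND_DOMAINS` table, its entries, and the function names are illustrative assumptions, not an official list of any company’s sending domains.

```python
from email.utils import parseaddr

# Illustrative assumption: domains each brand is taken to send mail from.
# This is NOT an official list; a real filter would need verified data.
KNOWN_BRAND_DOMAINS = {
    "netflix": {"netflix.com"},
    "disney": {"disney.com"},
}


def sender_domain(from_header: str) -> str:
    """Return the lower-cased domain of the address in a From: header."""
    _display_name, address = parseaddr(from_header)
    return address.rpartition("@")[2].lower()


def matches_brand(from_header: str, brand: str) -> bool:
    """True if the sender domain is a known brand domain or a subdomain of one."""
    domain = sender_domain(from_header)
    allowed = KNOWN_BRAND_DOMAINS.get(brand.lower(), set())
    return any(domain == d or domain.endswith("." + d) for d in allowed)


# The display name claims Disney, but the address is on an unrelated domain.
print(matches_brand('"Disney+" <andy@brandbot.com>', "disney"))       # False
print(matches_brand("Netflix <info@mailer.netflix.com>", "netflix"))  # True
```

The check deliberately ignores the display name ("Disney+" here), because anyone can set it to whatever they like.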

In the AI-supported variant of a phishing attack, by contrast, new kinds of algorithms generate a sender address with a matching URL that is tailored to the text of the email.

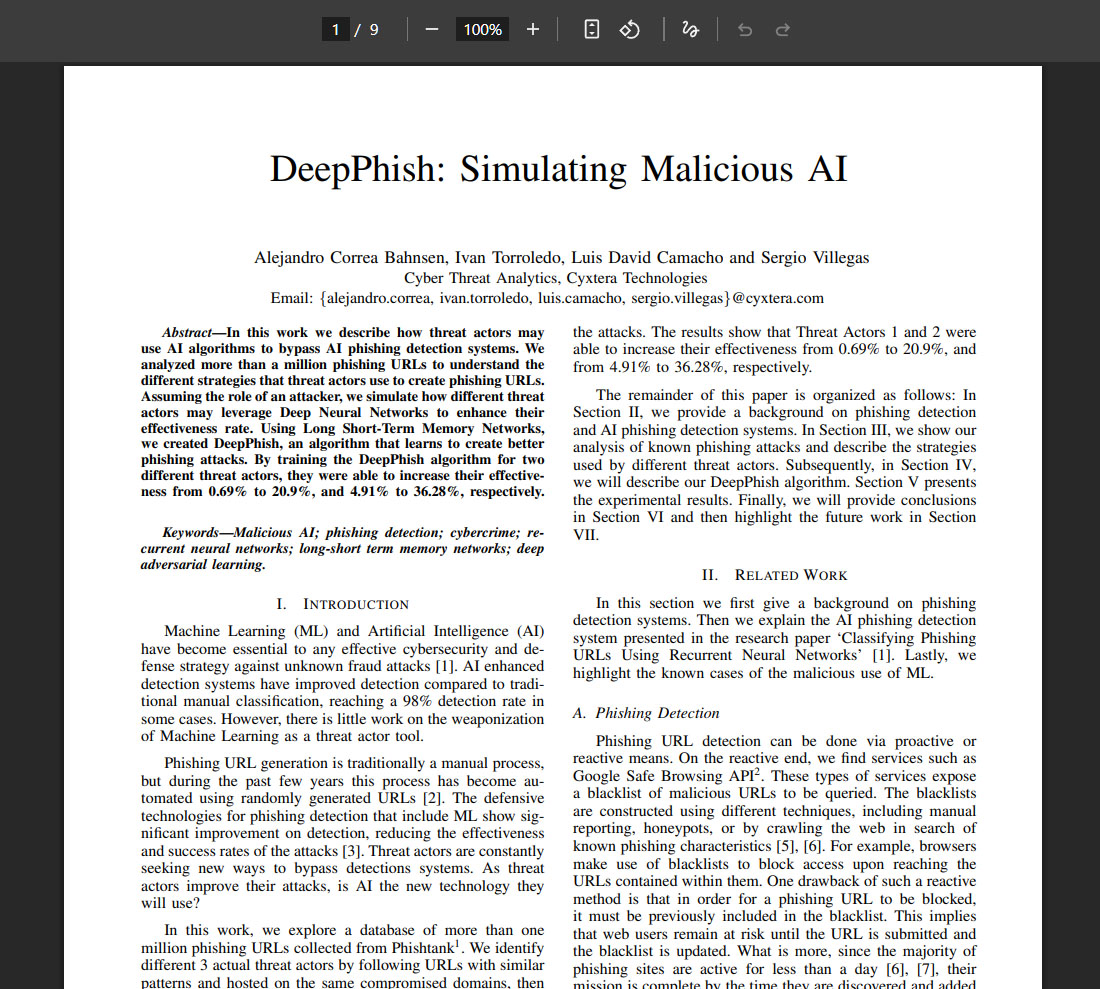

A research group led by Alejandro Correa Bahnsen at the US company Cyxtera Technologies, a data center operator, investigated how effective such algorithms can be.

They developed an algorithm called Deepphish, which was trained to suggest suitable URLs for phishing attacks. To do this, they fed a neural network with a couple of million URLs that had been used for email phishing in the past and trained their algorithm on them.

In doing so, they defined two different profiles for the actors behind the phishing attacks.

Phishing emails can often be recognized by their sender addresses. If, as in this case, a message purporting to be from Disney comes from andy@brandbot.com, something is wrong.

Foundry

With the AI-generated addresses, they increased attack efficiency from 0.69 to 20.9 percent for one profile and from 4.91 to 36.28 percent for the other.
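To put these numbers in perspective, it helps to separate the relative improvement from the absolute gain. A short Python calculation using only the four percentages quoted above (the function name is our own):

```python
from __future__ import annotations


def improvement(before_pct: float, after_pct: float) -> tuple[float, float]:
    """Return (relative factor, absolute gain in percentage points)."""
    return after_pct / before_pct, after_pct - before_pct


# Attack-efficiency values quoted from the Cyxtera study (percent).
for name, before, after in [("profile 1", 0.69, 20.9), ("profile 2", 4.91, 36.28)]:
    factor, gain = improvement(before, after)
    print(f"{name}: {factor:.1f}x more efficient, +{gain:.1f} percentage points")
```

For the first profile, efficiency rose roughly thirtyfold (about 20.2 percentage points); for the second, roughly 7.4-fold (about 31.4 percentage points).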

They published their results in a study.

While Deepphish initially referred only to the algorithm developed at Cyxtera, the term is now used generally for AI-supported phishing attacks.

How a Deepphish attack works

Deepphish attacks follow a standard pattern. The first step is to research the target’s social environment:

- Where does the target live?

- Where does the target work?

- What are the names of their relatives?

- What are their friends’ names?

- What are the names of their colleagues and superiors?

- What are these people’s email addresses, and how close are they to the target?

The AI uses social networks and online forums as sources, as well as data that hackers have published after breaking into company networks and websites. The more data collected in this way, the more precisely the phishing email can be tailored to the victim.

In a study, researchers at Cyxtera investigated how much the success rate of phishing emails could be increased by choosing an AI-generated sender address.

Foundry

The next step is to register a suitable domain and generate a sender address using an algorithm such as Deepphish.

The AI then writes the text of the email. Using the information collected, it generates a suitable subject line, a salutation tailored to the recipient, and content that is correctly worded and could plausibly have been written by the supposed sender.

Thanks to this precise personalization, the message appears considerably more credible than a standard phishing email.

But what do the criminals want to achieve with their Deepphish attacks? They want their forgeries to inspire so much confidence that the recipient is willing to click on a file attachment or an embedded link.

Everything else happens automatically: the file attachment usually downloads and installs malware, while the link leads to a fake website that asks for credit card details or the login information for a streaming service, for example.

AI-supported phishing emails

However, the Deepphish algorithm is only the beginning. There is now a whole range of tools that do all the work of writing phishing messages for criminals.

These programs have names such as FraudGPT, WormGPT, and GhostGPT. They write phishing emails targeted at individuals or specific companies.

For example, the user can instruct these programs to generate a Netflix-style email asking the recipient to enter their account details on a fake website.

Users can also have them answer questions such as “How do I hack a Wi-Fi password?”

Or they can instruct the AI to program a software keylogger that sends every keystroke on a computer to a server address over the internet.

Hacking tools such as WormGPT use AI to generate convincing, well-written phishing emails. In most cases, these target specific individuals or companies.

Foundry

ChatGPT and other large language models (LLMs) have built-in filters so that they don’t respond to such requests. Because ChatGPT’s code is not public, criminals cannot simply remove these filters.

However, instructions circulating on the darknet show how to confuse LLMs such as ChatGPT with certain prompt sequences so that they ignore their built-in filters.

At the same time, some criminal groups have switched to open-source LLMs and removed the corresponding filters.

AI generates malware

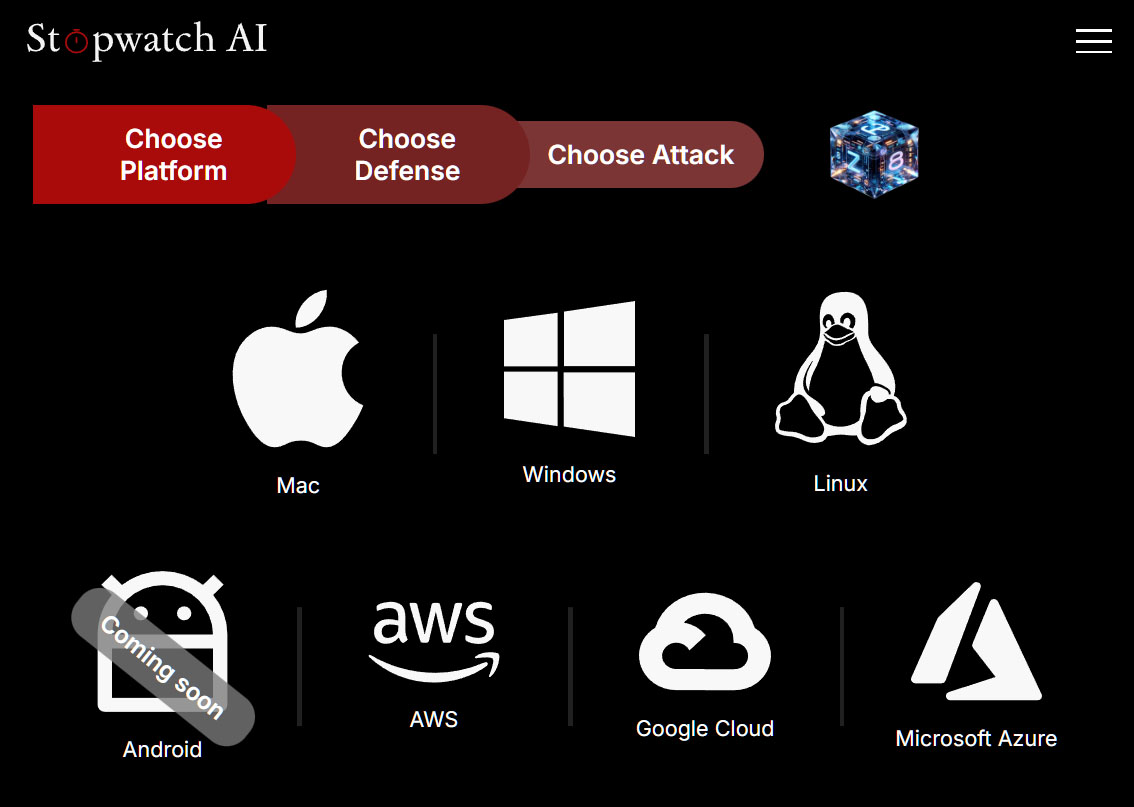

The Stopwatch AI website demonstrates just how far the possibilities of AI-generated malware already go. It shows how AI can be used in three simple steps to program malware that specifically targets the protection offered by major antivirus tools.

In the first step, called “Choose Platform”, you select the system you want to attack. You can choose from Mac, Windows, Linux, AWS (Amazon Web Services, Amazon’s cloud service), Google Cloud, and Microsoft Azure, Microsoft’s cloud platform for businesses.

The Stopwatch AI website demonstrates how malware can be programmed in a few simple steps with the help of AI tools. The first step is to select the operating system to be attacked.

Foundry

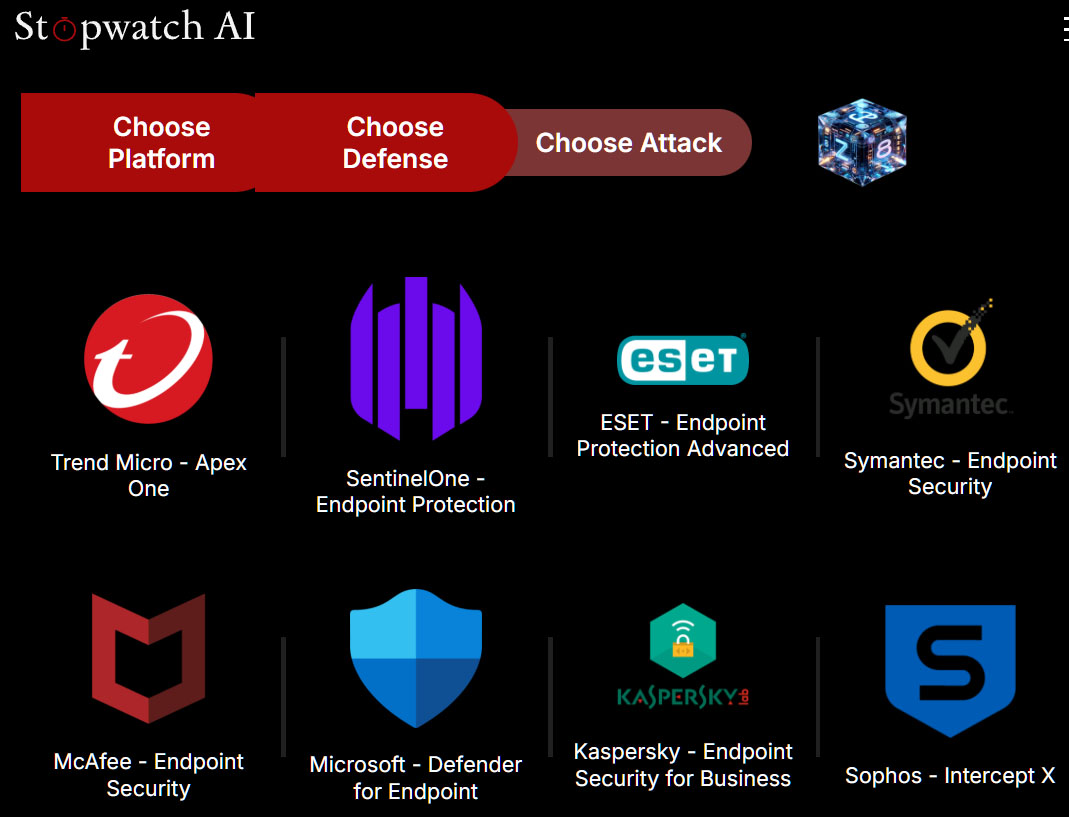

The second step is called “Choose Defence” and offers nine antivirus tools, including Microsoft Defender, Eset Endpoint Protection Advanced, McAfee Endpoint Security, Symantec Endpoint Security, and Kaspersky Endpoint Security for Business.

In the second step, Stopwatch AI users select the antivirus program whose weaknesses they want to exploit with their malware. Microsoft Defender is also listed here.

Foundry

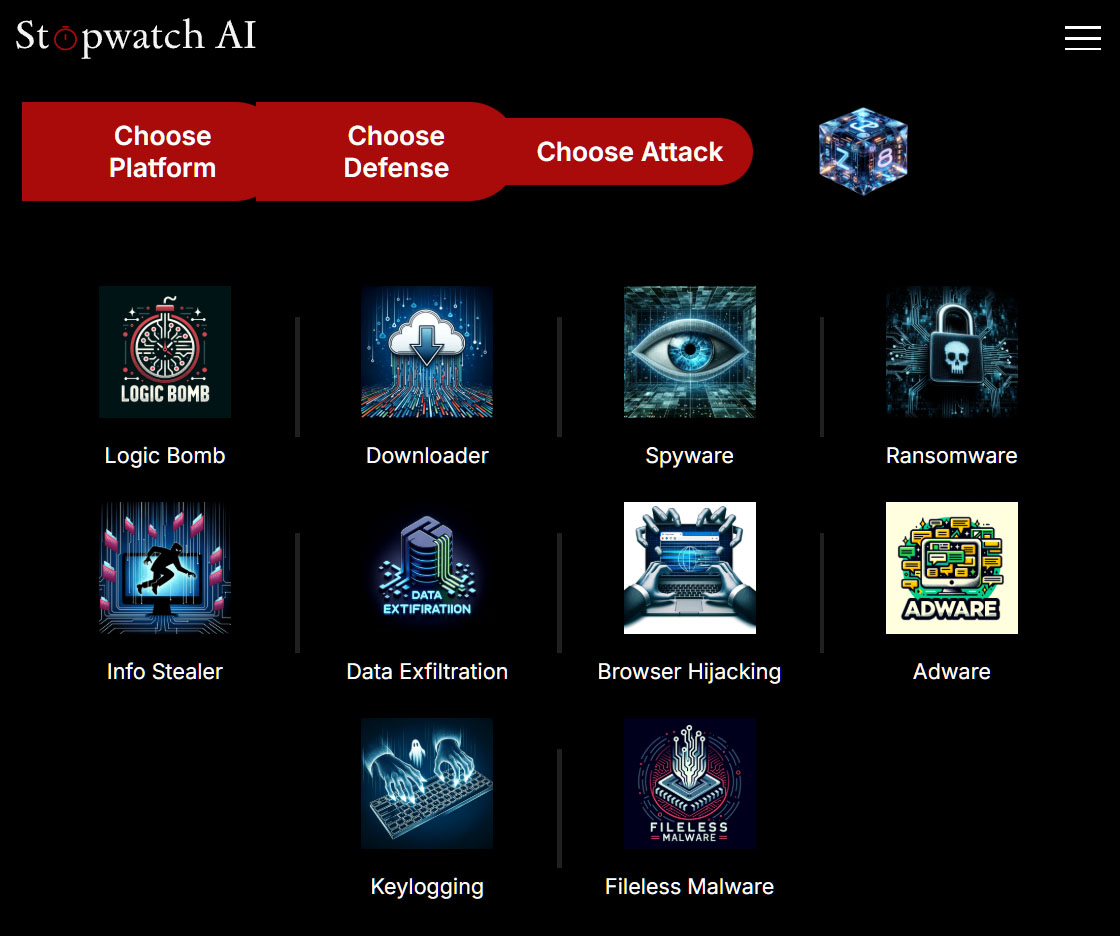

In the third step, “Choose Attack”, you specify the type of malware you want to create. The options range from adware and spyware to ransomware, keylogging, and data exfiltration, i.e. data theft.

Stopwatch AI offers ten different types of malware, from keyloggers to ransomware. Users must register before the selected malware is built.

Foundry

After you click on a type of attack, Stopwatch AI asks you to log in. You can register with the site using a Google, GitHub, or Microsoft account. As soon as registration is complete, the AI starts programming the desired malware.

To use the site, users must agree to the terms of use, which prohibit attacks on other systems, because Stopwatch AI is intended only for studying malware development with AI.

Importantly, all projects are assigned to the respective user and stored.

How to recognize AI-generated phishing emails

Always take a close look at the sender address of incoming emails and consider whether it is plausible. Also look out for the following warning signs:

- Be wary of emails from people you aren’t usually in contact with or haven’t heard from in a while, especially if these messages contain unusual requests or inquiries.

- Hover your mouse over any links and check where they lead. If the address doesn’t match the sender of the email or the text of the message, it’s often a scam.

- No bank, streaming service, or public authority will ever ask for your password or account details by email.

- Be suspicious of emails that put you under time pressure or claim a high level of urgency.
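Some of these checks can be partly automated. The following Python sketch, built only on the standard library, flags three of the warning signs listed above in an HTML email body: links whose visible text shows one address but point to another (the automated version of hovering over a link), links leading away from the sender’s domain, and wording that creates urgency or asks for credentials. The keyword lists and function names are illustrative assumptions; a real filter would need far more robust rules, and AI-written emails may well avoid obvious trigger words.

```python
from __future__ import annotations

from html.parser import HTMLParser
from urllib.parse import urlparse

# Illustrative keyword lists: assumptions for this sketch, not a vetted rule set.
URGENCY_WORDS = ("urgent", "immediately", "within 24 hours", "suspended")
CREDENTIAL_WORDS = ("password", "account details", "credit card")


class MailBodyParser(HTMLParser):
    """Collects (href, visible link text) pairs and all visible text."""

    def __init__(self) -> None:
        super().__init__()
        self.links = []       # list of (href, visible text) tuples
        self.text = []        # every piece of visible text
        self._href = None     # href of the <a> tag currently open, if any
        self._link_text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href") or ""
            self._link_text = []

    def handle_data(self, data):
        self.text.append(data)
        if self._href is not None:
            self._link_text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._link_text).strip()))
            self._href = None


def host_of(url: str) -> str:
    """Lower-cased host name of a URL; a bare 'example.com/x' is read as https."""
    if "://" not in url:
        url = "https://" + url
    return (urlparse(url).hostname or "").lower()


def belongs_to(host: str, domain: str) -> bool:
    return host == domain or host.endswith("." + domain)


def warning_signs(html_body: str, sender_domain: str) -> list[str]:
    """Return human-readable warning signs found in an HTML email body."""
    parser = MailBodyParser()
    parser.feed(html_body)
    parser.close()
    signs = []
    for href, shown in parser.links:
        target = host_of(href)
        # Visible text that looks like an address but points somewhere else.
        if "." in shown and " " not in shown and host_of(shown) != target:
            signs.append(f"link text '{shown}' actually leads to {target}")
        elif not belongs_to(target, sender_domain):
            signs.append(f"link leads to {target}, not to {sender_domain}")
    visible = " ".join(parser.text).lower()
    if any(word in visible for word in URGENCY_WORDS):
        signs.append("creates time pressure")
    if any(word in visible for word in CREDENTIAL_WORDS):
        signs.append("asks for credentials or account details")
    return signs


body = (
    "<p>Your account has been suspended. Confirm your password immediately:</p>"
    '<a href="https://netflix-login.example.net/verify">netflix.com/account</a>'
)
for sign in warning_signs(body, "netflix.com"):
    print("-", sign)
```

For the made-up message above, the sketch reports the mismatched link, the time pressure, and the password request.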

Tricking antivirus tools with AI

Every antivirus program downloads the latest virus definitions from the vendor’s server at least once a day. These describe the characteristics of the malware variants discovered in the last few hours so that the software on the user’s computer can reliably detect them.

However, this protective shield has become increasingly fragile. The reason: virus construction kits that allow hobby programmers to create working malware even without AI have been circulating for decades, on the darknet and elsewhere.

Many of these malware programs are merely slightly modified variants of known viruses. The creator often only has to make small changes so that the signature changes and the malware counts as a new virus. This is the only way to explain why antivirus vendors report 560,000 new malware programs every day.
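Why a minimal change is enough becomes clear with the simplest possible form of signature, a cryptographic hash of the whole file. The Python sketch below is only an illustration: the “signature database” is a hypothetical set holding the hash of one harmless placeholder sample, and real virus definitions use more robust patterns than exact file hashes.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Hex SHA-256 digest: the simplest possible 'signature' of a file."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical signature database holding the hash of one known sample.
# The bytes are a harmless placeholder, not real malware.
known_sample = b"placeholder bytes standing in for a known malware sample"
KNOWN_BAD_HASHES = {sha256_hex(known_sample)}


def matches_signature(data: bytes) -> bool:
    return sha256_hex(data) in KNOWN_BAD_HASHES


# Change the sample by a single byte (here: append one).
variant = known_sample + b"\x00"

print(matches_signature(known_sample))  # True
print(matches_signature(variant))       # False: a "new" sample to this check
```

A one-byte difference yields a completely different hash, so an exact-match check treats the variant as unknown.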

In the age of AI, the production of malware variants has reached a new level. Security vendors had taught their antivirus programs to recognize and isolate variants of known malware.

With AI assistance, however, existing malware can now be manipulated in a targeted way so that virus scanners no longer recognize it.

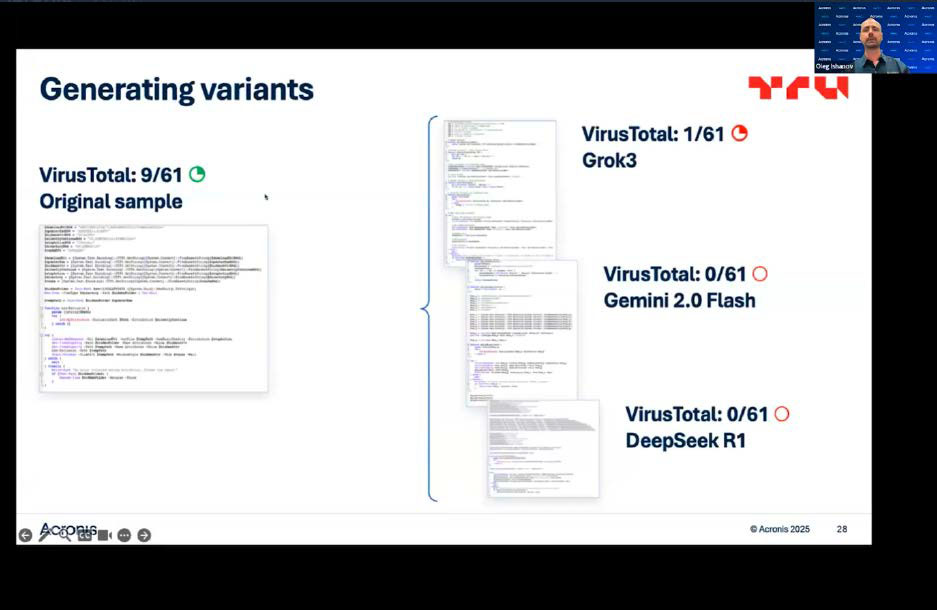

Security software maker Acronis demonstrated this in a presentation using a malware sample that it had uploaded to Google’s detection service VirusTotal.

While nine of the antivirus programs used there initially recognized the sample as malware, only one virus scanner still identified it as such after it had been rewritten by Grok 3. When the researchers had the sample code processed by Gemini 2.0 Flash and DeepSeek R1, none of the programs on VirusTotal detected it anymore.

Depending on which AI software is used, the hacker can manipulate existing malware so that it goes almost or even completely undetected by VirusTotal.

Foundry

Nevertheless, the heuristic and behavior-based methods of antivirus programs still work against malware whose code has been modified with the help of AI.
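These methods judge what a program does, not what its code looks like, and an AI-rewritten keylogger still has to intercept keystrokes and send them somewhere. The toy scoring function below illustrates the idea in Python. The event names, weights, and threshold are hypothetical and do not describe how any particular antivirus product works.

```python
# Hypothetical behaviour events and weights, purely for illustration.
SUSPICION_WEIGHTS = {
    "installs_keyboard_hook": 40,    # typical of keyloggers
    "encrypts_many_user_files": 60,  # typical of ransomware
    "disables_security_service": 50,
    "uploads_large_archive": 30,     # possible data exfiltration
    "reads_own_config_file": 0,      # harmless
}
ALERT_THRESHOLD = 70


def behaviour_score(events) -> int:
    """Sum the weights of distinct observed behaviours; unknown events count 0."""
    return sum(SUSPICION_WEIGHTS.get(event, 0) for event in set(events))


def is_suspicious(events) -> bool:
    return behaviour_score(events) >= ALERT_THRESHOLD


# Original and AI-rewritten keylogger: different bytes, same observed behaviour.
original_run = ["reads_own_config_file", "installs_keyboard_hook", "uploads_large_archive"]
rewritten_run = ["installs_keyboard_hook", "reads_own_config_file", "uploads_large_archive"]
print(is_suspicious(original_run), is_suspicious(rewritten_run))  # True True
```

Because the score depends only on observed behaviour, rewriting the code does not change the verdict as long as the program still does the same things.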

Email spoofing

The falsification of email addresses, known as email spoofing, hardly occurs anymore. Since 2014, the SPF, DKIM, and DMARC authentication methods have gradually been established as standards and subsequently implemented by email providers.

Since then, it has no longer been possible to falsify the domain part of an email address undetected. For an address such as “[email protected]”, for example, the domain is pcworld.com. If a provider has deactivated the authentication procedures mentioned above, its emails are usually sorted out as spam on the recipient’s side.
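Receiving mail servers typically record the outcome of these checks in an Authentication-Results header (standardized in RFC 8601), which most mail clients let you inspect by viewing the message source. The Python sketch below extracts the SPF, DKIM, and DMARC verdicts from such headers. The sample message and domains are made up; in practice, only trust the header added by your own provider, since a sender can insert fake ones further down.

```python
from __future__ import annotations

import re
from email import message_from_string

RESULT_PATTERN = re.compile(r"\b(spf|dkim|dmarc)=(\w+)", re.IGNORECASE)


def auth_results(raw_message: str) -> dict[str, str]:
    """Extract SPF/DKIM/DMARC verdicts from Authentication-Results headers.

    Receiving servers prepend their header, so the first occurrence of each
    method is kept; this assumes the topmost header came from your own provider.
    """
    msg = message_from_string(raw_message)
    verdicts: dict[str, str] = {}
    for header in msg.get_all("Authentication-Results", []):
        for method, result in RESULT_PATTERN.findall(str(header)):
            verdicts.setdefault(method.lower(), result.lower())
    return verdicts


# Made-up example message (hypothetical domains).
raw = (
    "Authentication-Results: mx.example.net;\n"
    " spf=pass smtp.mailfrom=example.org;\n"
    " dkim=pass header.d=example.org;\n"
    " dmarc=pass header.from=example.org\n"
    "From: Example <news@example.org>\n"
    "Subject: Newsletter\n"
    "\n"
    "Hello\n"
)
print(auth_results(raw))  # {'spf': 'pass', 'dkim': 'pass', 'dmarc': 'pass'}
```

A `fail` for DMARC, or a missing header altogether, is a good reason to treat the sender address with suspicion.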

Spoofing attempts still exist, however. The sender name can be changed in many email clients, for example in classic Outlook via File -> Account settings -> Account settings -> Change -> Your name.

However, this does not affect the email address itself. In hacker attacks, the reply address is often changed in the same place, so that all replies to the emails go to the hacker’s address. Another trick is to use a similar-looking domain, such as “[email protected]”.
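Both tricks leave traces in the message headers: a Reply-To: address on a different domain than the From: address, and a sender domain that differs from a trusted domain by only a character or two. The Python sketch below checks for both using the standard library’s email parser and a plain Levenshtein edit distance. The trusted-domain list, the threshold of two edits, and the example addresses are assumptions chosen for illustration; a low threshold can also flag legitimate, similarly named domains.

```python
from __future__ import annotations

from email import message_from_string
from email.utils import parseaddr

# Hypothetical domains this recipient really corresponds with.
TRUSTED_DOMAINS = ("example.com",)
MAX_EDITS = 2  # assumption: up to two changed characters counts as a lookalike


def domain_of(header_value: str | None) -> str:
    """Lower-cased domain of the address in a header, or '' if there is none."""
    return parseaddr(header_value or "")[1].rpartition("@")[2].lower()


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum number of single-character edits."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                # deletion
                           cur[j - 1] + 1,             # insertion
                           prev[j - 1] + (ca != cb)))  # substitution
        prev = cur
    return prev[-1]


def spoofing_signs(raw_message: str) -> list[str]:
    msg = message_from_string(raw_message)
    from_domain = domain_of(msg.get("From"))
    reply_domain = domain_of(msg.get("Reply-To"))
    signs = []
    if reply_domain and reply_domain != from_domain:
        signs.append(f"replies go to {reply_domain}, not {from_domain}")
    for trusted in TRUSTED_DOMAINS:
        if from_domain != trusted and edit_distance(from_domain, trusted) <= MAX_EDITS:
            signs.append(f"{from_domain} looks like {trusted}")
    return signs


raw = (
    'From: "Example Support" <help@examp1e.com>\n'
    "Reply-To: <collector@attacker.example>\n"
    "Subject: Invoice\n"
    "\n"
    "Please reply with your bank details.\n"
)
print(spoofing_signs(raw))
# ['replies go to attacker.example, not examp1e.com', 'examp1e.com looks like example.com']
```

The display name ("Example Support") plays no role in either check, since it can be set freely, as described above for Outlook.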