The AI chatbot ChatGPT from OpenAI triggered the hype surrounding generative artificial intelligence and dominates much of the media coverage.

However, in addition to OpenAI’s AI models, there are other chatbots that deserve attention. And unlike ChatGPT, these can also be run locally on the PC, and even used free of charge for an unlimited period of time.

We’ll show you four local chatbots that also run on older hardware. You can talk to them or use them to create texts.

The chatbots presented here generally consist of two parts: a front end and an AI model, the large language model.

You decide which model runs in the front end after installing the tool. Operation isn’t difficult if you know the basics. Some of the chatbots offer very extensive setting options, and using them requires expert knowledge. However, the bots can also be operated well with the standard settings.


What local AI can do

What you can expect from a local large language model (LLM) also depends on what you give it to work with: LLMs need computing power and plenty of RAM to respond quickly.

If these requirements are not met, the large models won’t even start and the small ones will take an agonizingly long time to respond. Things are faster with a current graphics card from Nvidia or AMD, as most local chatbots and AI models can then make use of the hardware’s GPU.

If your PC only has a weak graphics card, everything has to be calculated by the CPU, and that takes time.

If your PC only has 8GB of RAM, you can only start very small AI models. Although they can give correct answers to many simple questions, they quickly run into problems with peripheral topics. Computers with 12GB of RAM are already quite good, but 16GB of RAM or more is even better.

Then even AI models with 7 to 12 billion parameters will run smoothly. You can usually recognize how many parameters a model has by its name: a suffix at the end such as 2B or 7B stands for 2 or 7 billion.
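The naming convention can be checked mechanically. The following Python sketch is a hypothetical helper, not part of any of the chatbots: it assumes the size suffix is a number followed by B (billion) or M (million) and returns None when a name has no such suffix, as with Microsoft Phi 3 Mini.

```python
import re
from typing import Optional

# Hypothetical convention: a number followed by B (billion) or M (million) as a separate token.
SIZE_SUFFIX = re.compile(r"(\d+(?:\.\d+)?)\s*([BM])\b", re.IGNORECASE)


def parse_parameter_count(model_name: str) -> Optional[float]:
    """Return the parameter count implied by a suffix such as '2B' or '7B', else None."""
    match = SIZE_SUFFIX.search(model_name)
    if match is None:
        return None
    scale = 1e9 if match.group(2).upper() == "B" else 1e6
    return float(match.group(1)) * scale


if __name__ == "__main__":
    for name in ["Gemma 2 2B", "Llama 3.2 1B", "Llama 3.1 8B", "Microsoft Phi 3 Mini"]:
        print(name, parse_parameter_count(name))
```

Keep in mind that the suffix is a rounded label: Gemma 2 2B, for example, actually has 2.6 billion parameters.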

Recommendation for your hardware: Gemma 2 2B, with 2.6 billion parameters, already runs with 8GB of RAM and without GPU support. The results are usually fast and well structured. If you need an even less demanding AI model, you can use Llama 3.2 1B in the chatbot LM Studio, for example.
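Why a 2.6-billion-parameter model fits into 8GB of RAM can be estimated with simple arithmetic: the weights need roughly parameters × bits per weight ÷ 8 bytes. The sketch below assumes 4-bit quantized weights, a common format for local models but not one the article specifies, and it deliberately ignores the extra memory needed for the conversation context, the front end, and the operating system.

```python
def estimate_weight_memory_gb(parameters: float, bits_per_weight: float = 4.0) -> float:
    """Approximate memory for the model weights alone, in GB (1 GB = 10**9 bytes).

    Assumes every weight is stored with the same number of bits; context
    memory, the front end and the operating system are not included.
    """
    bytes_needed = parameters * bits_per_weight / 8
    return bytes_needed / 1e9


if __name__ == "__main__":
    # Parameter counts: 2.6e9 as stated for Gemma 2 2B; 8e9 taken from the name Llama 3.1 8B.
    for label, params in [("Gemma 2 2B", 2.6e9), ("Llama 3.1 8B", 8e9)]:
        for bits in (4, 16):
            print(f"{label} at {bits}-bit: ~{estimate_weight_memory_gb(params, bits):.1f} GB")
```

For Gemma 2 2B this gives about 1.3GB at 4 bits, while an 8-billion-parameter model stored at 16 bits would need about 16GB for the weights alone.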

If your PC is equipped with plenty of RAM and a fast GPU, try Gemma 2 9B or a Llama model of similar size, such as Llama 3.1 8B. You can load the models via the chatbots Msty, GPT4All, or LM Studio.

Information on the AI models for the Llamafiles can be found below. And for your information: ChatGPT from OpenAI is not available for local use on the PC. OpenAI’s apps and PC tools send all requests over the internet.

The most important steps

Using the various chatbots is very similar. You install the tool, load an AI model via the tool, and then switch to the program’s chat area. And you’re ready to go.

With the Llamafile chatbot, there is no need to download the model separately, as an AI model is already built into the Llamafile. This is why there are several Llamafiles, each with a different model.


Llamafile

Llamafiles are the simplest way to communicate with a local chatbot. The aim of the project is to make AI accessible to everyone. That’s why the creators pack all the necessary files, i.e. the front end and the AI model, into a single file: the Llamafile.

You only need to start this file, and the chatbot can then be used in the browser. However, the user interface isn’t very attractive.

The Llamafile chatbot is available in different versions, each with a different AI model. With the Llava model, you can also integrate images into the chat. Overall, Llamafile is easy to use as a chatbot.


Simple installation

Only one file is downloaded to your computer. The file name differs depending on the model selected.

For example, if you have chosen the Llamafile with the Llava 1.5 model with 7 billion parameters, the file is called “llava-v1.5-7b-q4.llamafile.” As the file extension .exe is missing here, you must rename the file in Windows Explorer after downloading.

You can dismiss the warning from Windows Explorer by clicking “Yes.” The file name will then be “llava-v1.5-7b-q4.llamafile.exe.” Double-click the file to start the chatbot. On older PCs, it may take a moment for Microsoft Defender SmartScreen to issue a warning.
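If you download several Llamafiles, the renaming can also be scripted. This Python sketch appends .exe to each file name passed on the command line; the helper name add_exe_extension is hypothetical, and the script only renames files, it does not suppress the SmartScreen prompt.

```python
import sys
from pathlib import Path


def add_exe_extension(path: Path) -> Path:
    """Rename e.g. 'llava-v1.5-7b-q4.llamafile' to 'llava-v1.5-7b-q4.llamafile.exe'."""
    if path.suffix.lower() == ".exe":
        return path  # already renamed, nothing to do
    # with_suffix() would replace '.llamafile', so append to the full name instead
    target = path.with_name(path.name + ".exe")
    path.rename(target)
    return target


if __name__ == "__main__":
    for name in sys.argv[1:]:
        print("Renamed to", add_exe_extension(Path(name)))
```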

Click on “Run anyway.” A command prompt window opens, but it is only there for the program. The chatbot doesn’t have its own user interface; it has to be operated in the browser. Start your default browser if it doesn’t open automatically and enter the address 127.0.0.1:8080 or localhost:8080.
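Besides the browser interface, the server started by a Llamafile can, to our knowledge, also be queried by your own programs. The Python sketch below assumes the OpenAI-compatible endpoint /v1/chat/completions on port 8080 described in the llamafile project’s README; the model name “LLaMA_CPP” is a placeholder, since a Llamafile serves the model it bundles. Check the README for your version before relying on this.

```python
import json
import urllib.request

# Assumption: the Llamafile server listens here and offers an OpenAI-compatible chat endpoint.
BASE_URL = "http://127.0.0.1:8080"


def build_chat_request(prompt: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Prepare a POST request with a single user message."""
    payload = {
        "model": "LLaMA_CPP",  # placeholder; the Llamafile answers with its bundled model
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        base_url + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, base_url: str = BASE_URL) -> str:
    """Send the prompt and return the text of the first answer."""
    request = build_chat_request(prompt, base_url)
    # Generous timeout: on CPU-only PCs, answers can take minutes.
    with urllib.request.urlopen(request, timeout=300) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    print(ask("Explain in one sentence what a large language model is."))
```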

If you want to use a different AI model, you have to download a different Llamafile. These can be found on Llamafile.ai further down the page in the “Other example llamafiles” table. Each Llamafile needs the file extension .exe.

Chatting with the Llamafile

The user interface in the browser shows the chatbot’s setting options at the top. The chat input is located at the bottom of the page under “Say something.”

If you have started a Llamafile with the Llava model (llava-v1.5-7b-q4.llamafile), you can not only chat but also have images explained to you via “Upload Image” and “Send.” Llava stands for “Large Language and Vision Assistant.” To end the chatbot, simply close the command prompt window.

Tip: Llamafiles can also be used in your own network. Start the chatbot on a powerful PC in your home network and make sure that the other PCs are authorized to access this computer. You can then use the chatbot from those PCs in the web browser at the address “<IP address>:8080,” replacing <IP address> with the address of the PC on which the chatbot is running.
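Before opening the browser on a second PC, you can check whether the chatbot PC is reachable at all. This Python sketch builds the browser address from an IP address and tries a TCP connection to port 8080; the address 192.168.1.20 in the example is hypothetical, so substitute the address of your own chatbot PC.

```python
import ipaddress
import socket


def chatbot_url(host_ip: str, port: int = 8080) -> str:
    """Build the browser address for a chatbot running on another PC in the network."""
    address = ipaddress.ip_address(host_ip)  # raises ValueError for typos such as 192.168.1.300
    host = f"[{address}]" if address.version == 6 else str(address)
    return f"http://{host}:{port}/"


def is_reachable(host_ip: str, port: int = 8080, timeout: float = 2.0) -> bool:
    """Return True if something on host_ip accepts TCP connections on the given port."""
    try:
        with socket.create_connection((host_ip, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    host = "192.168.1.20"  # hypothetical address of the chatbot PC
    print(chatbot_url(host), "reachable:", is_reachable(host))
```

If is_reachable returns False even though the chatbot is running, the access authorization on the chatbot PC (for example its firewall) is the first thing to check.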

Msty

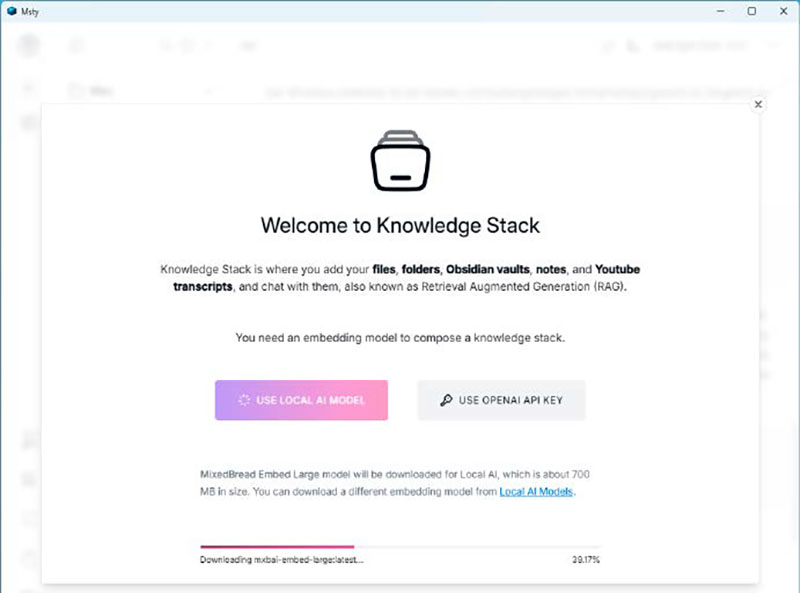

Msty offers access to many language models, good user guidance, and the option to import your own files for use by the AI. Not everything is self-explanatory, but it’s easy to use after a short familiarization period.

If you want to make your own files available to the AI purely locally, you can do this in Msty in the so-called Knowledge Stack. That sounds a bit pretentious. However, Msty actually offers the best file integration of the four chatbots presented here.


Installation of Msty

Msty is available for download in two versions: one with support for Nvidia and AMD GPUs and the other for running on the CPU only. When you start the Msty installation wizard, you can choose between a local installation (“Set up local AI”) and an installation on a server.

For the local installation, the Gemma 2 model is already selected in the lower part of the window. This model is only 1.6GB in size and is well suited to text creation on weaker hardware.

If you click on “Gemma2,” you can choose between five other models. Later, many more models, such as Gemma 2 2B or Llama 3.1 8B, can be loaded from a clearly organized library via “Local AI Models.”

“Browse & Download Online Models” gives you access to the AI sites www.ollama.com and www.huggingface.com and therefore to most of the free AI models.

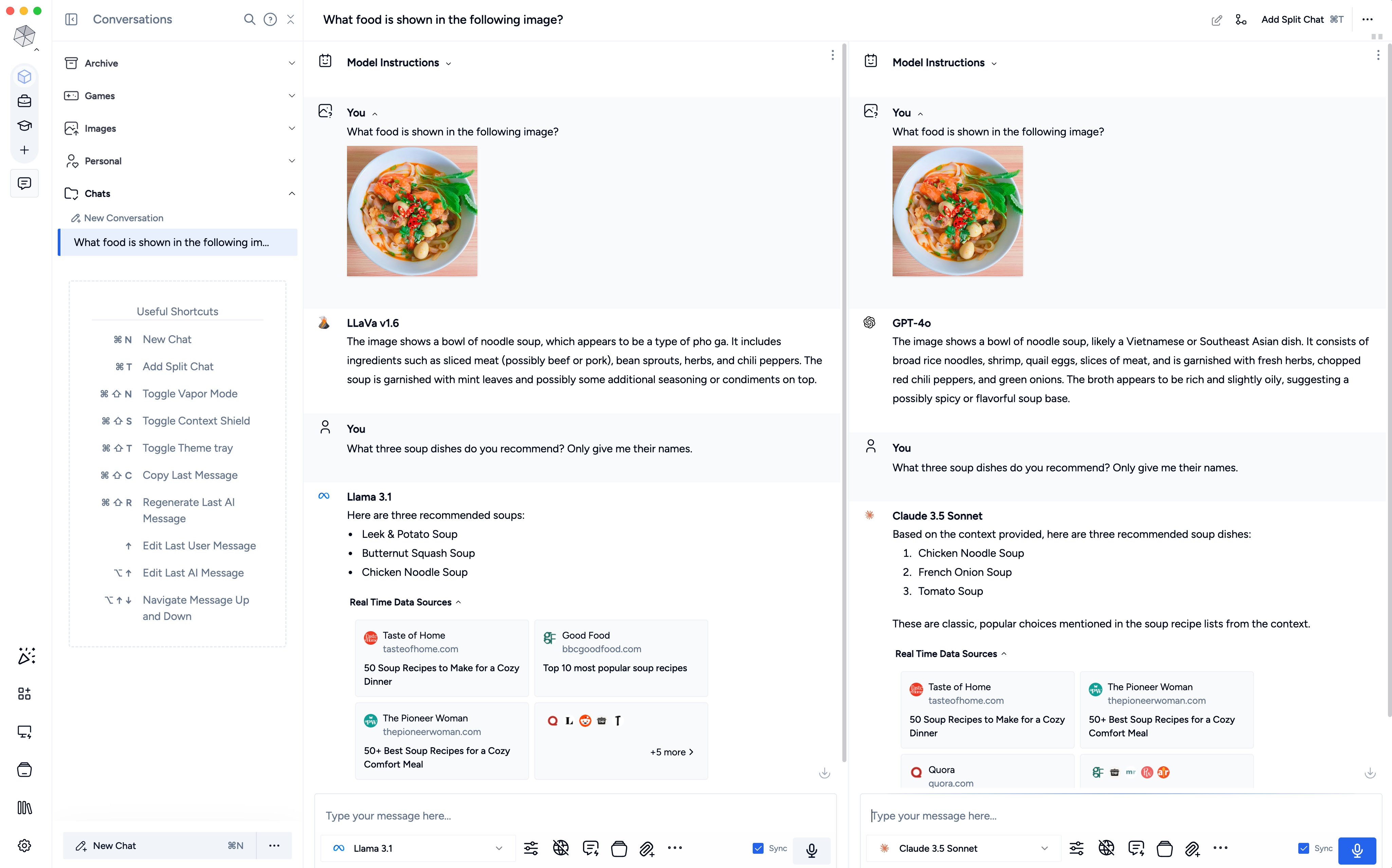

A special feature of Msty is that you can ask several AI models for advice at the same time. However, your PC should have enough memory to respond quickly; otherwise you’ll have to wait a long time for the finished answers.


Pretty interface, plenty of substance

Msty’s user interface is appealing and well structured. Of course, not everything is immediately obvious, but once you get used to Msty, you can work with the tool quickly, add new models, and integrate your own files. Msty gives access to the many, often cryptic options of the individual models, at least partially via graphical menus.

In addition, Msty offers so-called split chats. The user interface then displays two or more chats side by side, and a different AI model can be selected for each one. You only have to enter your question once, which lets you compare several models with each other.
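The principle behind split chats, one question sent to several models, can be sketched in a few lines of Python. This is not Msty’s code: each model is represented by a hypothetical function that takes a prompt and returns an answer (for example a wrapper around a local server). The models are queried in parallel threads, which is exactly where the PC’s memory becomes the bottleneck.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

# Hypothetical type: any function that sends a prompt to one model and returns its answer.
ModelFn = Callable[[str], str]


def split_chat(prompt: str, models: Dict[str, ModelFn]) -> Dict[str, str]:
    """Send the same prompt to every model and return the answers by model name."""
    if not models:
        return {}
    with ThreadPoolExecutor(max_workers=len(models)) as pool:
        futures = {name: pool.submit(fn, prompt) for name, fn in models.items()}
        # result() blocks, so the slowest model determines when all answers are ready
        return {name: future.result() for name, future in futures.items()}


if __name__ == "__main__":
    # Stand-ins for real models, only to show the side-by-side output.
    fake_models = {
        "shouting-model": str.upper,
        "mirror-model": lambda text: text[::-1],
    }
    for name, answer in split_chat("Which model answers best?", fake_models).items():
        print(f"{name}: {answer}")
```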

Add your own files

You can easily integrate your own files via “Knowledge Stacks.” You can choose which embedding model should prepare your data for the LLMs.

Mixedbread Embed Large is used by default, but other embedding models can also be loaded. Take care when selecting the model, however, as online embedding models, for example from OpenAI, can also be chosen.

In that case, your data is sent to OpenAI’s servers for processing. The database created from your data is then also online, and every query goes to OpenAI as well.

Chat with your own files: After you have added your own documents to the “Knowledge Stacks,” select “Attach Knowledge Stack and Chat with them” below the chat input line. Tick the box in front of your stack and ask a question. The model will search through your data to find the answer. However, this doesn’t work very well yet.
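In general terms, embedding-based file integration works like this: your documents are split into chunks, an embedding model turns each chunk into a vector, and the chunks most similar to your question are handed to the LLM together with the question. The Python sketch below illustrates that flow under a deliberate simplification (Msty’s internals are not described here): instead of a real embedding model such as Mixedbread Embed Large, it uses plain word counts and cosine similarity, which only matches identical words, not meaning.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Stand-in for an embedding model: counts of lower-cased words."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity of two word-count vectors (0.0 if either is empty)."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_chunks(question: str, chunks: List[str], k: int = 2) -> List[Tuple[float, str]]:
    """Score every stored chunk against the question and keep the k best."""
    q = embed(question)
    scored = [(cosine(q, embed(chunk)), chunk) for chunk in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


def build_prompt(question: str, chunks: List[str], k: int = 2) -> str:
    """Combine the best-matching chunks and the question into one prompt for the LLM."""
    context = "\n\n".join(chunk for _, chunk in top_chunks(question, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    stack = [
        "The invoice is due on 31 March.",
        "Our office cat is called Felix.",
        "Payment can be made by bank transfer.",
    ]
    print(build_prompt("When is the invoice due?", stack, k=1))
```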

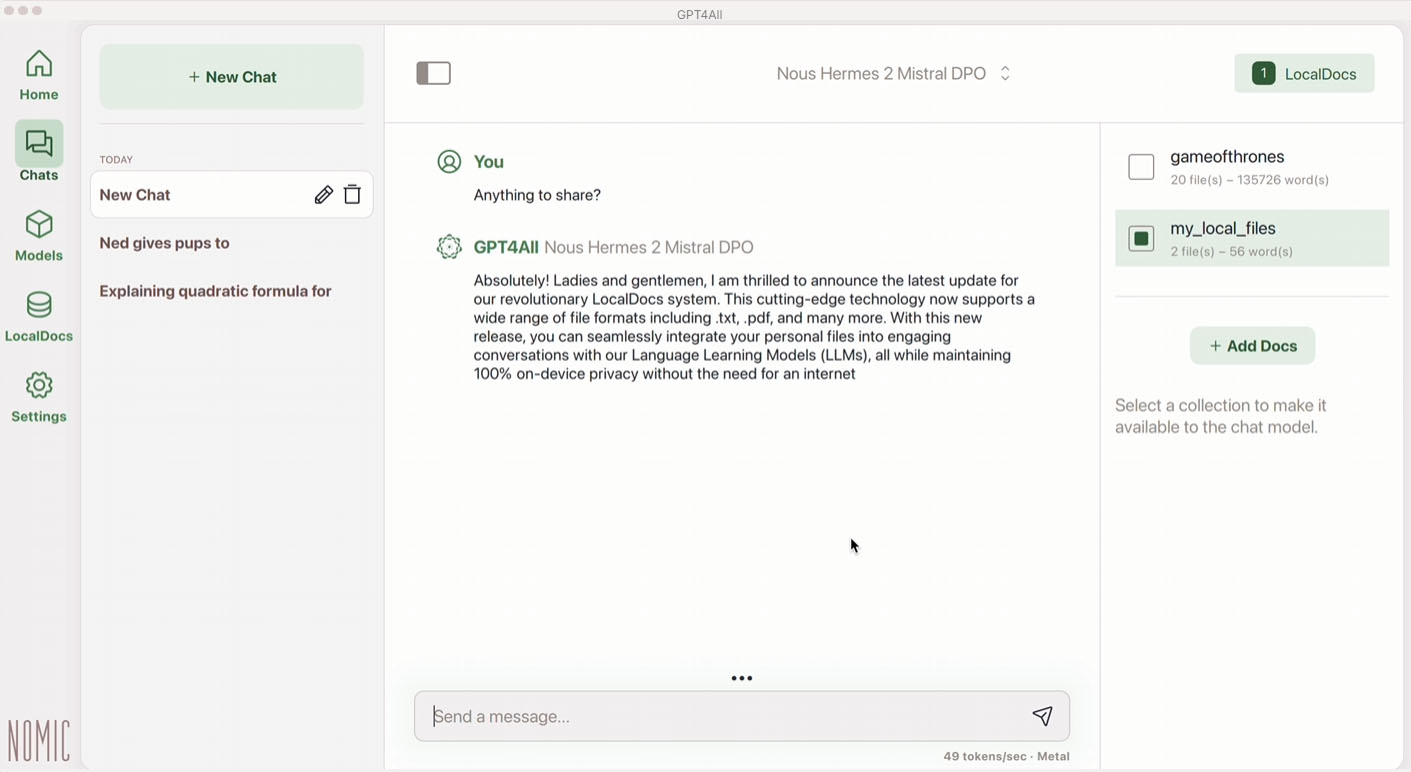

GPT4All

GPT4All offers a handful of models, a simple user interface, and the option of reading in your own files. The selection of chat models is smaller than with Msty, for example, but it is clearer. Additional models can be downloaded via Huggingface.com.

The GPT4All chatbot is a solid front end that offers a good selection of AI models and can load more from Huggingface.com. The user interface is well structured and you’ll quickly find your way around.


Installation: Quick and easy

Installing GPT4All was quick and easy for us. AI models can be selected under “Models.” The models offered include Llama 3 8B, Llama 3.2 3B, Microsoft Phi 3 Mini, and EM German Mistral.

Good: For each model, the tool states how much free RAM the PC needs for the model to run. The search function also gives access to AI models on Huggingface.com. In addition, the online models from OpenAI (ChatGPT) and Mistral can be integrated via API keys, for those who don’t just want to chat locally.

Operation and chat

The user interface of GPT4All is similar to that of Msty, but with fewer functions and options, which makes it easier to use. After a short orientation phase, in which you learn how models are loaded and where they are selected for the chat, operation is simple.

Your own files can be made available to the AI models via “LocalDocs.” Unlike in Msty, you cannot choose which embedding model prepares the data; the nomic-embed-text-v1.5 model is used in all cases.

In our tests, the tool ran stably. However, it was not always clear whether a model had already been fully loaded.

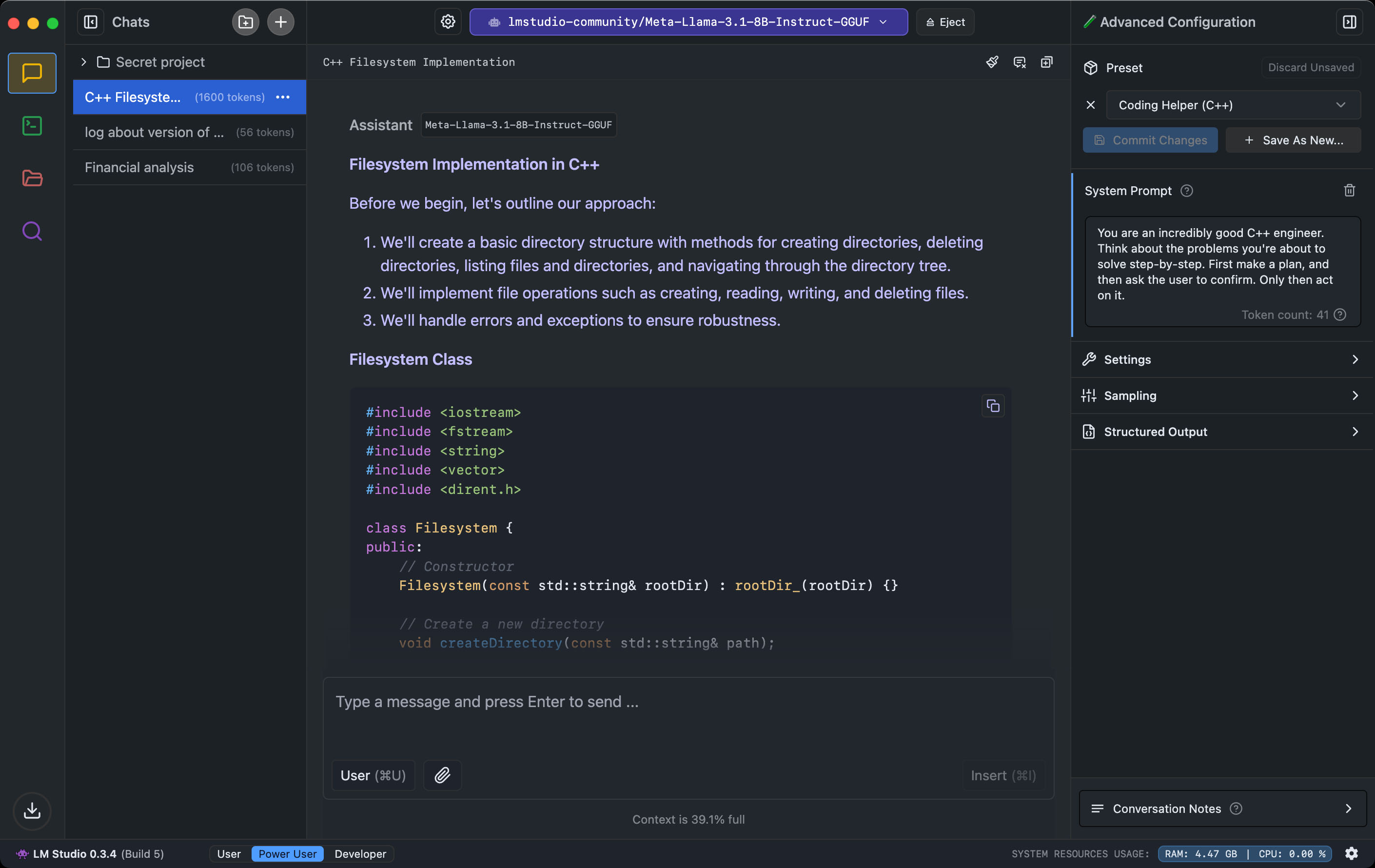

LM Studio

LM Studio offers user guidance for beginners, advanced users, and developers. Despite this categorization, it’s aimed more at professionals than beginners. What professionals like is that anyone working with LM Studio has access not only to many models but also to their options.

The LM Studio chatbot not only gives you access to a large selection of AI models from Huggingface.com, but also lets you fine-tune the models’ settings. There is a separate developer view for this.


Straightforward installation

After installation, LM Studio greets you with the “Get your first LLM” button. Clicking on it offers a very small version of Meta’s LLM: Llama 3.2 1B.

This model should run even on older hardware without long waiting times. After downloading, the model must be started via a pop-up window and “Load Model.” Additional models can be added using the Ctrl-Shift-M key combination or the “Discover” magnifying glass icon, for example.

Chat and integrate documents

At the bottom of the LM Studio window, you can change the program’s view using the three buttons “User,” “Power User,” and “Developer.”

In the first case, the user interface is similar to that of ChatGPT in the browser; in the other two cases, the view is supplemented with additional information, such as how many tokens a response contains and how quickly they were generated.

This, together with access to many details of the AI models, makes LM Studio particularly interesting for advanced users. You can make many fine adjustments and inspect detailed information.

Your own texts can only be added to an individual chat; they cannot be made permanently available to the language models. When you add a document to your chat, LM Studio automatically decides whether it is short enough to fit completely into the AI model’s prompt.

If not, the document is searched for relevant content using Retrieval Augmented Generation (RAG), and only this content is passed to the model in the chat. However, the text is often not captured in full.
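The decision described here (the full document if it fits, otherwise only selected passages) can be sketched as follows. LM Studio’s actual logic is not documented in this article, so everything below is an assumption: the 4-characters-per-token figure is a rough heuristic for English text, the context size and reserve are example values, and passages are ranked by simple word overlap instead of real embeddings. The sketch also shows why text gets lost: whatever sits in chunks that aren’t selected never reaches the model.

```python
import re
from typing import List, Set

# Assumption: about 4 characters per token, a rough rule of thumb for English text.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    return len(text) // CHARS_PER_TOKEN + 1


def words(text: str) -> Set[str]:
    return set(re.findall(r"\w+", text.lower()))


def split_into_chunks(text: str, chunk_chars: int = 1000) -> List[str]:
    return [text[i:i + chunk_chars] for i in range(0, len(text), chunk_chars)]


def prepare_document(document: str, question: str, context_tokens: int = 4096,
                     reserve_tokens: int = 1024, top_k: int = 3) -> str:
    """Return the whole document if it fits, otherwise only the best-matching chunks.

    context_tokens and reserve_tokens (room for question and answer) are example values.
    """
    if estimate_tokens(document) <= context_tokens - reserve_tokens:
        return document  # short enough: the model sees the complete text
    question_words = words(question)
    chunks = split_into_chunks(document)
    # Rank chunks by how many question words they contain (stand-in for embeddings).
    ranked = sorted(chunks, key=lambda c: len(question_words & words(c)), reverse=True)
    # Everything outside the top_k chunks is never shown to the model.
    return "\n[...]\n".join(ranked[:top_k])


if __name__ == "__main__":
    long_doc = "filler text " * 5000 + "the secret code is 42"
    print(prepare_document(long_doc, "What is the secret code?")[:200])
```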

This article initially appeared on our sister publication PC-WELT and was translated and localized from German.