The scale of supercomputing has grown almost too large to comprehend, with tens of millions of compute units performing calculations at rates requiring, for the first time, the exa prefix, denoting quintillions per second. How was this achieved? With careful planning… and a lot of wires, say two people close to the project.

Having noted the news that Intel and Argonne National Lab were planning to take the wrapper off a new exascale computer called Aurora (one of several being built in the U.S.) earlier this year, I recently got a chance to talk with Trish Damkroger, head of Intel’s Extreme Computing Organization, and Rick Stevens, Argonne’s associate lab director for computing, environment and life sciences.

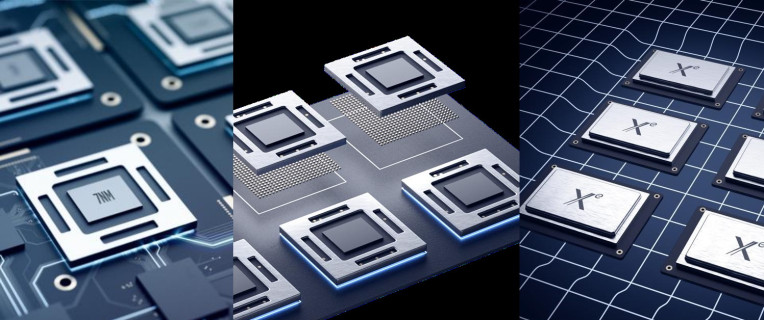

The two discussed the technical details of the system at the Supercomputing conference in Denver, where, probably, most of the people who can truly say they understand this type of work already were. So while you can read in industry journals and the press release about the nuts and bolts of the system, including Intel’s new Xe architecture and Ponte Vecchio general-purpose compute chip, I tried to get a little more of the big picture from the two.

It should surprise no one that this is a project long in the making, but you might not guess exactly how long: more than a decade. Part of the challenge, then, was to envision computing hardware that was leagues beyond what was possible at the time.

“Exascale was first being started in 2007. At that time we hadn’t even hit the petascale target yet, so we were planning like three to four magnitudes out,” said Stevens. “At that time, if we had exascale, it would have required a gigawatt of power, which is obviously not realistic. So a big part of reaching exascale has been reducing power draw.”
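To put that gigawatt figure in perspective, here is a rough back-of-the-envelope sketch. It assumes "exascale" means 10^18 operations per second and takes the one-gigawatt estimate from the quote at face value; the function names and the 30-megawatt budget are hypothetical placeholders for illustration, not figures from Intel or Argonne.

```python
EXA_OPS_PER_SECOND = 10**18   # what the exa prefix implies
GIGAWATT = 10**9              # watts, the 2007-era estimate in the quote


def joules_per_op(power_watts, ops_per_second):
    """Energy spent on each operation at a given power draw and rate."""
    return power_watts / ops_per_second


def reduction_factor(old_power_watts, new_power_watts):
    """How many times less power the new budget allows for the same work."""
    return old_power_watts / new_power_watts


# One gigawatt spread over 10^18 operations per second is about one
# nanojoule per operation.
energy_2007 = joules_per_op(GIGAWATT, EXA_OPS_PER_SECOND)

# ASSUMPTION: a purely illustrative power budget, not Aurora's real one.
HYPOTHETICAL_BUDGET_WATTS = 30e6
needed = reduction_factor(GIGAWATT, HYPOTHETICAL_BUDGET_WATTS)

print(f"2007 estimate: {energy_2007 * 1e9:.2f} nJ per operation")
print(f"Efficiency gain needed for the placeholder budget: {needed:.1f}x")
```

Whatever the real budget is, the shape of the problem is the same: the energy cost of every single operation has to fall by a large factor before the total becomes something a lab can plug in.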

Intel’s supercomputing-focused Xe architecture is based on a 7-nanometer process, pushing the very edge of Newtonian physics: go much smaller and quantum effects start coming into play. But the smaller the gates, the less power they take, and microscopic savings add up quickly when you’re talking billions and trillions of them.

But that merely exposes another problem: If you increase the power of a processor by 1000x, you run into a memory bottleneck. The system may be able to think fast, but if it can’t access and store data equally fast, there’s no point.

“By having exascale-level computing, but not exabyte-level bandwidth, you end up with a very lopsided system,” said Stevens.
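One common way to reason about that lopsidedness is the roofline model, which caps achievable throughput at whichever is lower: the processor's peak rate, or the rate at which memory can feed it. The sketch below is a generic illustration of that idea, not a description of how Aurora was designed; the bandwidth and operations-per-byte numbers are hypothetical.

```python
def attainable_ops_per_second(peak_ops, bytes_per_second, ops_per_byte):
    """Roofline estimate: the lower of the compute and memory ceilings."""
    memory_bound_rate = bytes_per_second * ops_per_byte
    return min(peak_ops, memory_bound_rate)


PEAK = 1e18  # an exascale processor complex

# ASSUMPTION: illustrative values only.
# A workload that performs one operation per byte it moves, on a system
# whose memory delivers 1e16 bytes per second.
lopsided = attainable_ops_per_second(PEAK, 1e16, 1.0)

# The same workload with "exabyte-level" bandwidth to match.
balanced = attainable_ops_per_second(PEAK, 1e18, 1.0)

print(f"Lopsided system reaches {lopsided / PEAK:.0%} of peak")
print(f"Balanced system reaches {balanced / PEAK:.0%} of peak")
```

Under these made-up numbers the lopsided machine spends 99% of its potential waiting on data, which is the point Stevens is making.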

And once you clear both these obstacles, you run into a third: what’s called concurrency. High performance computing is as much about synchronizing a task between huge numbers of computing units as it is about making those units as powerful as possible. The machine operates as a whole, and as such every part must communicate with every other part, which becomes something of a problem as you scale up.

“These systems have many thousands of nodes, and the nodes have hundreds of cores, and the cores have thousands of computation units, so there’s like, billion-way concurrency,” Stevens explained. “Dealing with that is the core of the architecture.”
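The "billion-way" figure falls out of simple multiplication. The counts below are round placeholders chosen to match the orders of magnitude in the quote ("many thousands," "hundreds," "thousands"); they are assumptions for illustration, not Aurora's actual configuration.

```python
def total_concurrency(nodes, cores_per_node, units_per_core):
    """Number of computation units that can be working at the same time."""
    return nodes * cores_per_node * units_per_core


# ASSUMPTION: round numbers at the low end of each range in the quote.
nodes = 10_000           # "many thousands of nodes"
cores_per_node = 100     # "hundreds of cores"
units_per_core = 1_000   # "thousands of computation units"

ways = total_concurrency(nodes, cores_per_node, units_per_core)
print(f"{ways:,} things happening at once")
```

Even at the low end of each range, the product is a billion, and every one of those units has to be kept in step with the others.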

How they did it, I, being utterly unfamiliar with the vagaries of high performance computing architecture design, wouldn’t even attempt to explain. But they seem to have done it, as these exascale systems are coming online. The solution, I’ll only venture to say, is essentially a major advance on the networking side. The level of sustained bandwidth between all these nodes and units is staggering.

Making exascale accessible

While even in 2007 you could predict that we’d eventually reach such low-power processes and improved memory bandwidth, other trends would have been nearly impossible to predict, for example the exploding demand for AI and machine learning. Back then it wasn’t even a consideration, and now it would be folly to create any kind of high performance computing system that wasn’t at least partially optimized for machine learning problems.

“By 2023 we expect AI workloads to be a third of the overall HPC server market,” said Damkroger. “This AI-HPC convergence is bringing those two workloads together to solve problems faster and provide greater insight.”

To that end the architecture of the Aurora system is built to be flexible while retaining the ability to accelerate certain common operations, for instance the type of matrix calculations that make up a great deal of certain machine learning tasks.

“But it’s not just about performance, it has to be about programmability,” she continued. “One of the big challenges of an exascale machine is being able to write software to use that machine. oneAPI is going to be a unified programming model; it’s based on an open standard of Open Parallel C++, and that’s key for promoting use in the community.”

Summit, as of this writing the most powerful single computing system in the world, is very dissimilar to many of the systems developers are used to working on. If the creators of a new supercomputer want it to have broad appeal, they need to make it as close to a “normal” computer to operate as possible.

“It’s something of a challenge to bring x86-based packages to Summit,” Stevens noted. “The big advantage for us is that, because we have x86 nodes and Intel GPUs, this thing is basically going to run every piece of software that exists. It’ll run standard software, Linux software, literally millions of apps.”

I asked about the costs involved, since it’s something of a mystery with a system like this how a half-billion-dollar budget gets broken down. Really I just thought it would be interesting to know how much of it went to, say, RAM versus processing cores, or how many miles of wire they had to run. Though both Stevens and Damkroger declined to comment, the former did note that “the backlink bandwidth on this machine is many times the total of the entire internet, and that does cost something.” Make of that what you will.

Aurora, unlike its cousin El Capitan at Lawrence Livermore National Lab, won’t be used for weapons development.

“Argonne is a science lab, and it’s open, not classified science,” said Stevens. “Our machine is a national user resource; we have people using it from all over the country. A large amount of time is allocated via a process that’s peer reviewed and priced to accommodate the most interesting projects. About two thirds is that, and the other third Department of Energy stuff, but still unclassified problems.”

Initial work will be in climate science, chemistry, and data science, with 15 teams between them signed up for major projects to be run on Aurora. Details are to be announced soon.