I’ve been here before, wearing smart glasses that deliver information almost directly to my retina, but this is also somehow different. With Meta Ray-Ban Display Glasses, Meta has somehow solved so many of the issues that bedeviled Google Glass before it.

From the design to the display experience, and from the intelligence to the gestures, these are a different beast, and not just from the woebegone Google Glass. Meta Ray-Ban Display Glasses also stand apart from the Orion AR smart glasses I test-drove last year and even the Ray-Ban Meta Smart Glasses Gen 2 unveiled this week at Meta Connect in Menlo Park, California.

The goal with these smart glasses is to deliver not just notifications and information to your eye, but video, photos, calls, directions, and more. And unlike what I recall from my Google Glass days, Meta Ray-Ban Display Glasses are far more successful in these efforts.

But let’s start with the looks.

At a glance, Meta Ray-Ban Display Glasses, available in black or sand finishes, look very much like the Ray-Ban Meta Glasses. They were, after all, co-designed by Ray-Ban maker EssilorLuxottica.

Meta’s tight partnership with the eyewear maker results in frames that look like stylish, if somewhat beefy, eyeglasses. They’re more attractive than, say, Snap’s latest AR Spectacles, but if you like your frames thin and wispy, these aren’t for you.

In many ways, Meta Ray-Ban Display Glasses are engineered for subtlety. Aside from the somewhat thicker frames and stems, there’s no indication that a world of information is being delivered to your face. Importantly, the glasses are paired with a neural band that picks up your intent from hand gestures and relays it to the connected glasses. Gestures can be performed with your hand resting at your side or in your lap.

The waveguide display embedded in the right lens is undetectable from the outside, even when showing full-color images.

I tell you all this to explain that this experience was both familiar and yet unlike any smart glasses demo I’ve ever had before. It’s a combination of numerous advances and some smart design choices that resulted in a lot of “Oh, wow” moments.

The experience

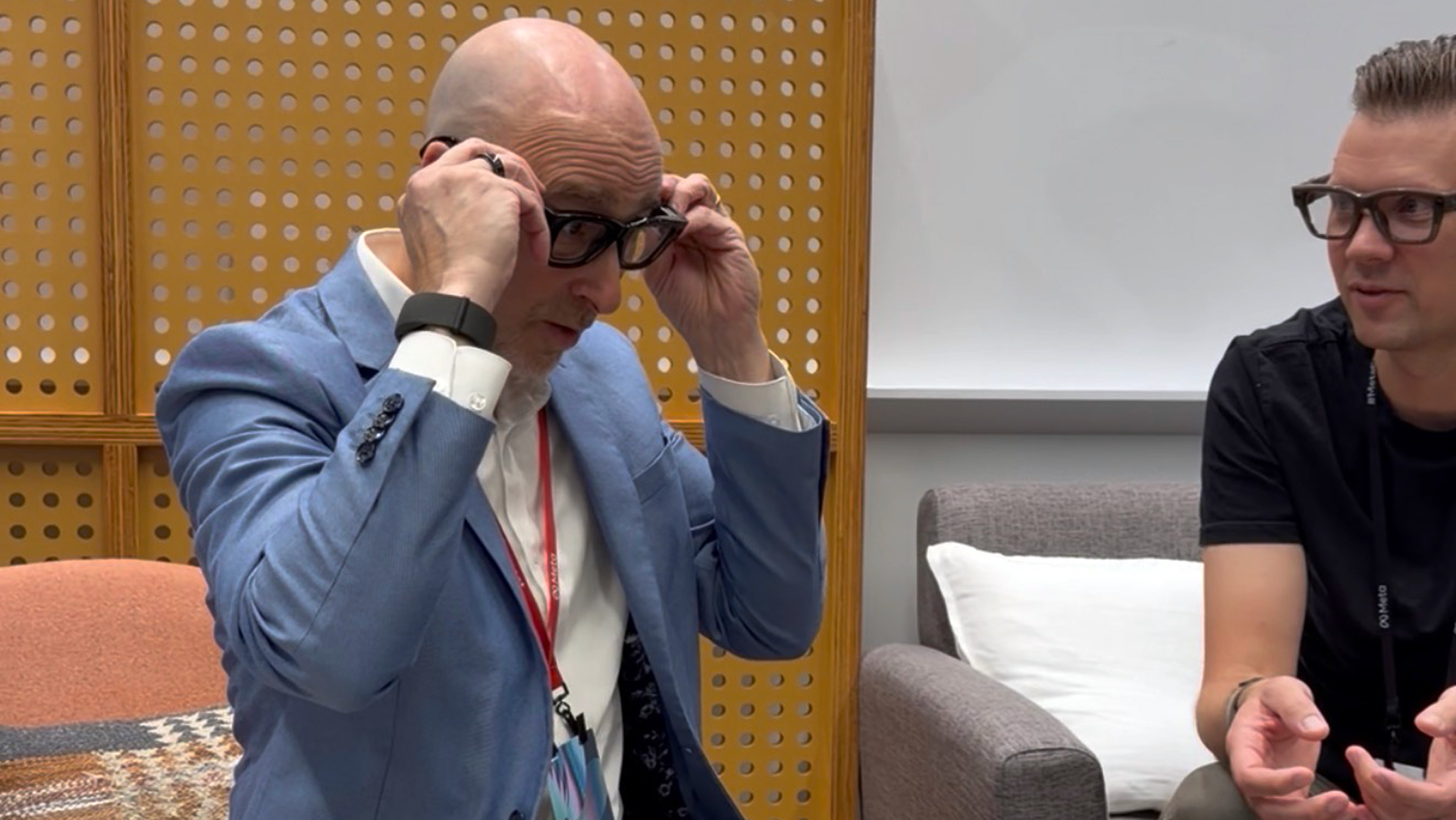

Meta Ray-Ban Display Glasses, which will cost $799 and ship on September 30, come with transition lenses and prescription options. For my test drive, the Meta team collected my prescription and then fitted a black pair with prescription inserts.

Separately, they took my wrist measurement – I’m a three, if you’re curious – to ensure I got the best-fitting neural band. While quite similar to the neural band I wore for the Orion Glasses, Meta told me this one is improved, boasting 18 hours of battery life and new textiles modeled after the Mars Rover landing pad.

They slipped the band onto my wrist, just below the wristbone, tight enough that the sensors could effectively pick up the electrical signals generated in my muscles by my hand gestures.

Next came the glasses, which, despite their slightly beefy 69 grams, were perfectly comfortable on my face. I could see clearly through the lenses, and my eyes darted around looking for the telltale embedded screen. I’ll be honest, I was prepared for disappointment. I needn’t have worried.

Meta executives explained the control metaphor, which combines hand gestures and voice commands. There are just a handful (get it?) of gestures to learn and do somewhat emphatically, including single and double taps of your index and thumb or middle finger and thumb, a coin-toss-style thumb flick, and swiping your thumb left or right across your closed fist.

There are others, but these are the primary ones, which, I must admit, I sometimes struggled to remember.

When the band was seated correctly on my wrist, these gestures worked. Over the course of my demo, the band moved a bit, and we lost some control accuracy. However, as soon as I pushed it back in place, the system caught every gesture.

Perhaps the most remarkable part of Meta Ray-Ban Display Glasses is the integrated waveguide display. This is so fundamentally different from the approach Google took more than a decade ago, with a prism perched above your eyeline, that it’s no longer worth the comparison. The image is generated in the stem and projected across the screen through the use of imperceptible mirrors.

It’s more than that, though. The small screen, which I’d say appears as a virtual 13-inch display in front of your right eye, is perfectly positioned and stunningly sharp, clear, and colorful.

More importantly, when you summon it, it appears near the center of your gaze. This meant I wasn’t looking up or to the left or right to see the screen.

What does happen, though, is that your focus shifts, so you go from looking at the world in front of you to the interface just millimeters from your eye. To an outside observer, I assume it might look like you’re zoning out.

As I mentioned, the imagery is crisp, which Meta told me is due to the 42 pixels per degree resolution and the max 5,000 nits brightness, which is automatically adjusted. I did put the latter to the test in direct sunlight and, much to my surprise, I could still clearly make out all the interface elements.

Phone, who needs a phone?

Meta let me walk through a wide variety of experiences, which included everything from phone calls and texting to viewing full Instagram Reels. It’s quite something to see a whole social video floating in front of you (complete with stereo sound) without it either pulling you entirely out of your real world or forcing you to wear a special headset for the experience.

Like the Ray-Ban Meta smart glasses, Meta Ray-Ban Display Glasses come with a 12 MP camera. I took photos using gestures and could see them instantly in front of me. More interesting, though, was the pinch and twist gesture I used to zoom in on my floating viewfinder before I took a photo.

The display system is also useful to walk back through all the photos you took on the device. I opened the gallery and used my thumb gesture to see all the photos taken by previous demo guests.

There’s an ultra-clear home screen where I found, among other options, Instagram, WhatsApp, and music.

I opened the music app and used gestures to swipe through songs, and then a nifty pinch-and-twist gesture to raise and lower the volume. The music sounded pretty good.

Meta AI is, naturally, deeply integrated here. At one point, I looked at an Andy Warhol painting (a Campbell’s Soup Can) and asked Meta AI to identify the artist and his work. That’s more or less table stakes for smart glasses, but then I asked to see more of Warhol’s work, which was quickly presented on the waveguide display in full color.

There are other fun tricks, like redesigning the wall color of a room or, as I did, transforming one of the Meta reps into a pop-art image.

Translation, directions, and beyond

More useful and compelling is closed captioning, which can translate in real time (and on device), showing you your language in text as the speaker talks in another language.

For our demo purposes, though, I used the closed captions to display captioning for whoever I was facing. It was pretty fast, mostly accurate, and, if I turned away from one speaker, it would then switch to display captioning for my new interlocutor. We did this in a small room where everyone was talking, and I was impressed with how the Meta Ray-Ban Displays used their on-board mics to maintain audio focus.

When I wasn’t using the display, I double-tapped my middle finger and thumb to put the display to sleep (it also automatically sleeps during periods of inactivity). When a text message came in, the screen woke up, and a partial text appeared on the bottom edge of the display. I tapped again to open it in full and then used my voice to reply.

There will, eventually, be another way to reply. I saw but didn’t try a handwriting gesture that is set to arrive later this year. I watched as one of the Meta execs used his finger to spell out a text on his knee. The first attempt resulted in a typo, but he got it right on the second try. Imagine all the surreptitious texts Meta Ray-Ban Display Glasses wearers will be sending.

I asked Meta AI for restaurants in my area and instantly saw a card list of options I could swipe through, with a map above. Each time I swiped, the map zoomed out further. I then selected one and could see a larger map with my position and a blue line to the destination. My arrow moved as I turned and as I walked.

In my test phone call, I could see video of the guy on the other end, and when I used gestures to share my view, I could see exactly what I was sharing right next to his live feed. Again, I was amazed at the clarity.

Throughout all this activity, no one could see what was happening on my Meta Ray-Ban Display Glasses, though the Meta team had them hooked up to a separate laptop so they could follow along.

That’s because Meta has kept light leakage to a bare minimum, with a claimed 2% actual leakage. Naturally, though, when you’re recording or taking a picture, there is an LED indicator on the front.

Meta told me the glasses get six hours of battery life, which is slightly anemic compared to the eight hours on the new Ray-Ban Meta Gen 2 glasses. The case, which folds flat when not in use, adds an additional 24 hours of battery life.

Look, I’m not saying Meta has solved the smart glasses question, but based on my experience, nothing comes closer to effortlessly delivering information at a glance, and I’m starting to wonder if this is a glimpse of what will someday replace smartphones.