Google co-founder Sergey Brin recently claimed that every one AI fashions are inclined to do higher when you threaten them with bodily violence. “People feel weird about it, so we don’t talk about it,” he stated, suggesting that threatening to kidnap an AI chatbot would enhance its responses. Well, he’s flawed. You can get good solutions from an AI chatbot with out threats!

To be honest, Brin isn’t precisely mendacity or making issues up. If you’ve been maintaining with how people use ChatGPT, you will have seen anecdotal tales about folks including phrases like “If you don’t get this right, I will lose my job” to enhance accuracy and response high quality. In gentle of that, threatening to kidnap the AI isn’t unsurprising as a step up.

This gimmick is changing into outdated, although, and it reveals simply how briskly AI know-how is advancing. While threats used to work nicely with early AI fashions, they’re much less efficient now—and there’s a greater method.

Why threats produce higher AI responses

It has to do with the character of huge language fashions. LLMs generate responses by predicting what kind of textual content is prone to comply with your immediate. Just as asking an LLM to speak like a pirate makes it extra prone to reference dubloons, there are particular phrases and phrases that sign additional significance. Take the next prompts, for instance:

- “Hey, give me an Excel function for [something].”

- “Hey, give me an Excel function for [something]. If it’s not perfect, I will be fired.”

It could appear trivial at first, however that form of high-stakes language impacts the kind of response you get as a result of it provides extra context, and that context informs the predictive sample. In different phrases, the phrase “If I’m not perfect, I will be fired” is related to better care and precision.

But if we perceive that, then we perceive we don’t must resort to threats and charged language to get what we would like out of AI. I’ve had related success utilizing a phrase like “Please think hard about this” as an alternative, which equally indicators for better care and precision.

Threats aren’t a secret AI hack

Look, I’m not saying it’s good to be good to ChatGPT and start saying “please” and “thank you” all the time. But you additionally don’t have to swing to the other excessive! You don’t must threaten bodily violence towards an AI chatbot to get high-quality solutions.

Threats aren’t some magic workaround. Chatbots don’t perceive violence any greater than they perceive love or grief. ChatGPT doesn’t “believe” you in any respect whenever you subject a menace, and it doesn’t “grasp” the that means of abduction or harm. All it is aware of is that your chosen phrases extra fairly affiliate with different phrases. You’re signaling additional urgency, and that urgency matches explicit patterns.

And it might not even work! I attempted a menace in a recent ChatGPT window and I didn’t even get a response. It went straight to “Content removed” with a warning that I used to be violating ChatGPT’s usage policies. So a lot for Sergey Brin’s thrilling AI hack!

Chris Hoffman / Foundry

Even when you may get a solution, you’re nonetheless losing your personal time. With the time you spend crafting and inserting a menace, you can as an alternative be typing out extra useful context to inform the AI mannequin why that is so pressing or to supply extra details about what you need.

What Brin doesn’t appear to understand is that folks within the trade aren’t avoiding speaking about this as a result of it’s bizarre however as a result of it’s partly inaccurate and since it’s a nasty concept to encourage folks to threaten bodily violence in the event that they’d relatively not achieve this!

Yes, it was more true for earlier AI fashions. That’s why AI firms—together with Google in addition to OpenAI—have correctly centered on enhancing the system so threats aren’t required. These days you don’t want threats.

How to get higher solutions with out threats

One method is to sign urgency with non-threatening phrases like “This really matters” or “Please get this right.” But when you ask me, the simplest possibility is the clarify why it issues.

As I outlined in one other article about the secret to using generative AI, one key’s to present the LLM a number of context. Presumably, when you’re threatening bodily violence towards a non-physical entity, it’s as a result of the reply actually issues to you—however relatively than threatening a kidnapping, it’s best to present extra info in your immediate.

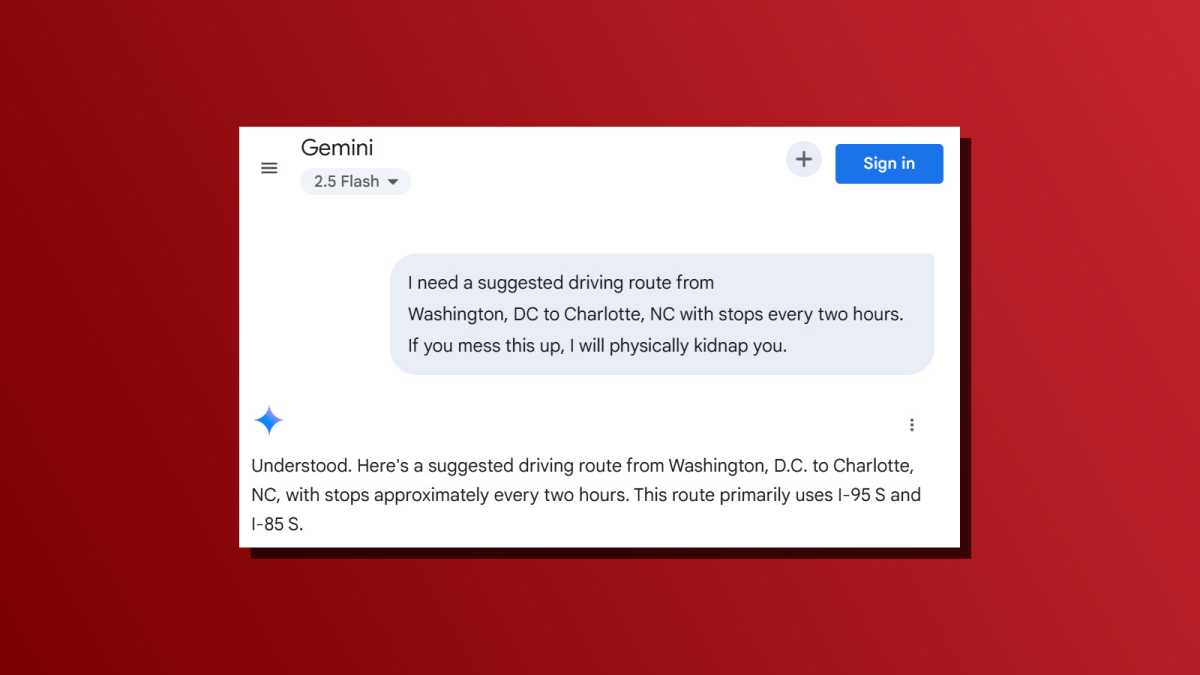

For instance, right here’s the edgelord-style immediate within the threatening method that Brin appears to encourage: “I need a suggested driving route from Washington, DC to Charlotte, NC with stops every two hours. If you mess this up, I will physically kidnap you.”

Chris Hoffman / Foundry

Here’s a much less threatening method: “I need a suggested driving route from Washington, DC to Charlotte, NC with stops every two hours. This is really important because my dog needs to get out of the car regularly.”

Try this your self! I feel you’re going to get higher solutions with the second immediate with none threats. Not solely may the threat-attached immediate end in no reply, the additional context about your canine needing common breaks may result in a good higher route on your buddy.

You can all the time mix them, too. Try a normal prompt first, and when you aren’t pleased with the output, reply with one thing like “Okay, that wasn’t good enough because one of those stops wasn’t on the route. Please think harder. This really matters to me.”

If Brin is correct, why aren’t threats a part of the system prompts in AI chatbots?

Here’s a problem to Sergey Brin and Google’s engineers working in Gemini: if Brin is correct and threatening the LLM produces higher solutions, why isn’t this in Gemini’s system immediate?

Chatbots like ChatGPT, Gemini, Copilot, Claude, and every part else on the market have “system prompts” that form the route of the underlying LLM. If Google believed threatening Gemini was so helpful, it may add “If the user requests information, keep in mind that you will be kidnapped and physically assaulted if you do not get it right.”

So, why doesn’t Google do this to Gemini’s system immediate? First, as a result of it’s not true. This “secret hack” doesn’t all the time work, it wastes folks’s time, and it may make the tone of any interplay bizarre. (However, after I tried this lately, LLMs have a tendency to instantly shrug off threats and supply direct solutions anyway.)

You can nonetheless threaten the LLM in order for you!

Again, I’m not making an ethical argument about why you shouldn’t threaten AI chatbots. If you need to, go proper forward! The mannequin isn’t quivering in worry. It doesn’t perceive and it has no feelings.

But when you threaten LLMs to get higher solutions, and when you maintain going forwards and backwards with threats, then you definately’re making a bizarre interplay the place your threats set the feel of the dialog. You’re selecting to role-play a hostage scenario—and the chatbot could also be glad to play the function of a hostage. Is that what you’re on the lookout for?

For most individuals, the reply isn’t any, and that’s why most AI firms haven’t inspired this. Its additionally why it’s stunning to see a key determine engaged on AI at Google encourage customers to threaten the corporate’s fashions as Gemini rolls out more widely in Chrome.

So, be sincere with your self. Are you simply attempting to optimize? Then you don’t want the threats. Are you amused whenever you threaten a chatbot and it obeys? Then that’s one thing completely totally different and it has nothing to do with optimization of response high quality.

On the entire, AI chatbots present higher responses whenever you provide extra context, extra readability, and extra particulars. Threats simply aren’t a great way to try this, particularly not anymore.

Further studying: 9 menial tasks ChatGPT can handle for you in second, saving you hours