For the past few years, the term “AI PC” has basically meant little more than “a lightweight portable laptop with a neural processing unit (NPU).” Today, two years after the glitzy launch of NPUs with Intel’s Meteor Lake hardware, these AI PCs still feel like glorified tech demos.

But local AI is here! And it’s impressive. It just has nothing to do with NPUs. Indeed, if all you have is an NPU, you’d think local AI has failed. The reality is that local AI tools are more capable than ever, but you wouldn’t know it because they run on GPUs instead of NPUs.

NPUs were supposed to usher in a new era of local AI on laptops and PCs. Turns out, the big push for NPUs has failed spectacularly.

NPUs have failed to deliver local AI (so far)

Neural processing units work. They can even power some interesting little features and gimmicks. But we were promised an age of NPU-driven AI PCs that ran powerful and game-changing local AI tools. Two years later, that marketing dream is a near-complete failure.

Yes, you can use a variety of Copilot+ PC features in Windows, like Windows Recall, which snaps screenshots of your PC’s desktop every 5 seconds. There’s also the image generator in the Photos app, which can generate some truly horrendous-looking pictures.

Chris Hoffman / Foundry

There are some useful bits, of course. Windows Studio Effects is good for sprucing up your webcam video, and semantic search will make it easier to find files on Windows. But these neat little perks are far from the kind of powerful “run full-featured AI on your PC” experiences we were sold by the excited AI PC marketing. Remember when Microsoft declared 2024 to be “the year of the AI PC”? What happened?

What’s worse, Microsoft is already pivoting away from an NPU-centric approach with Windows ML. Since developers aren’t writing apps with NPUs in mind, Windows ML will let developers write AI apps that run on CPUs, GPUs, and NPUs.

But Microsoft has a big problem: local AI is here and it’s pretty good, but the most popular apps don’t use NPUs at all. They may never even transition to Windows ML. Microsoft has been acting like it’s ahead of the game, but the company’s bet on NPUs means it has been left behind. The local AI ecosystem is building on Windows without using any Microsoft-provided AI hooks. Uh oh.

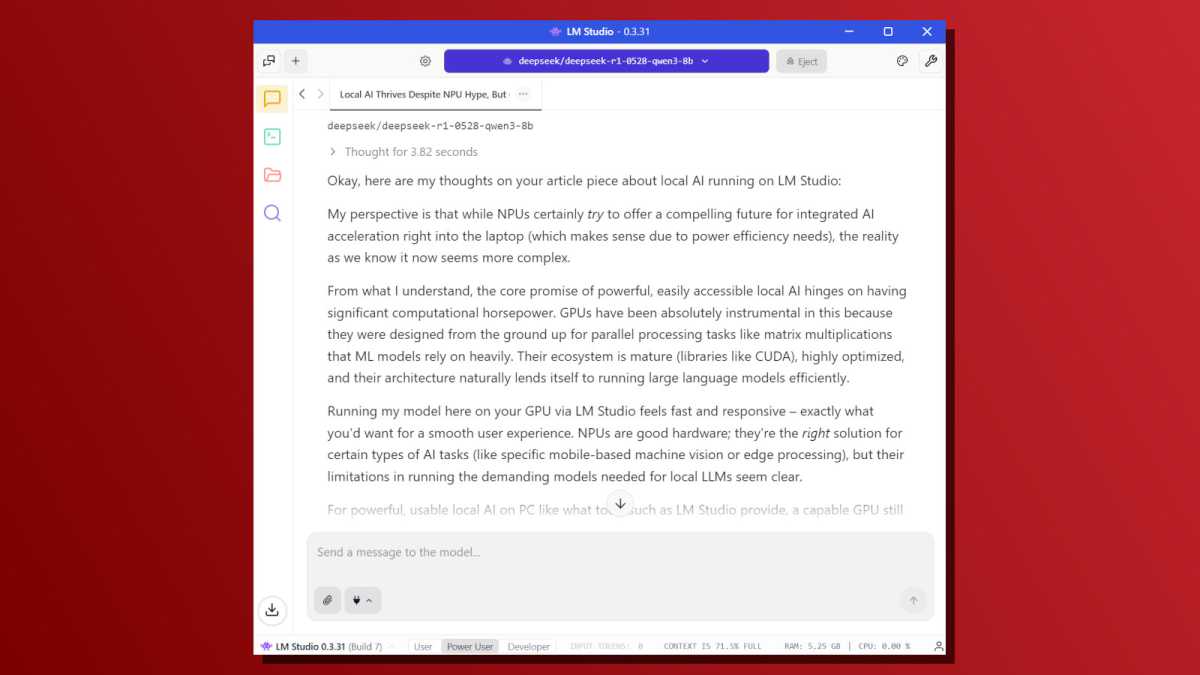

Local AI is already here, for GPUs

If you have a gaming PC and you’re wondering just how good local AI is, try downloading LM Studio. In just a few clicks, you can be running a local LLM and using an AI chatbot that runs entirely on your own hardware. In many ways, this is the dream of the NPU-powered AI PC: a local AI tool that people could start using in a few clicks without any technical knowledge. Well, it’s here. Sort of.

Like many other AI tools, LM Studio primarily supports GPUs but also has a slower fallback mode for CPUs. It can’t do anything at all with NPUs. Similarly, other well-known local AI tools like Ollama and Llama.cpp (a backend that many other tools rely on) don’t have any support for NPUs.

These tools work impressively well, yet they don’t work with NPUs at all. Why didn’t Microsoft or Intel hire an engineer or two to integrate NPU support into the open-source tools people are actually using? If a fraction of the money spent on marketing NPU-powered “AI PCs” went to actually making NPUs useful, I’d be singing a different tune.

Long story short: if you want to run local AI on your own hardware, stay away from so-called “AI PCs” with NPUs. What you really want is a gaming PC with a powerful GPU, ideally one by Nvidia, since local AI tools are still written with Nvidia hardware in mind (thanks to Nvidia’s CUDA).

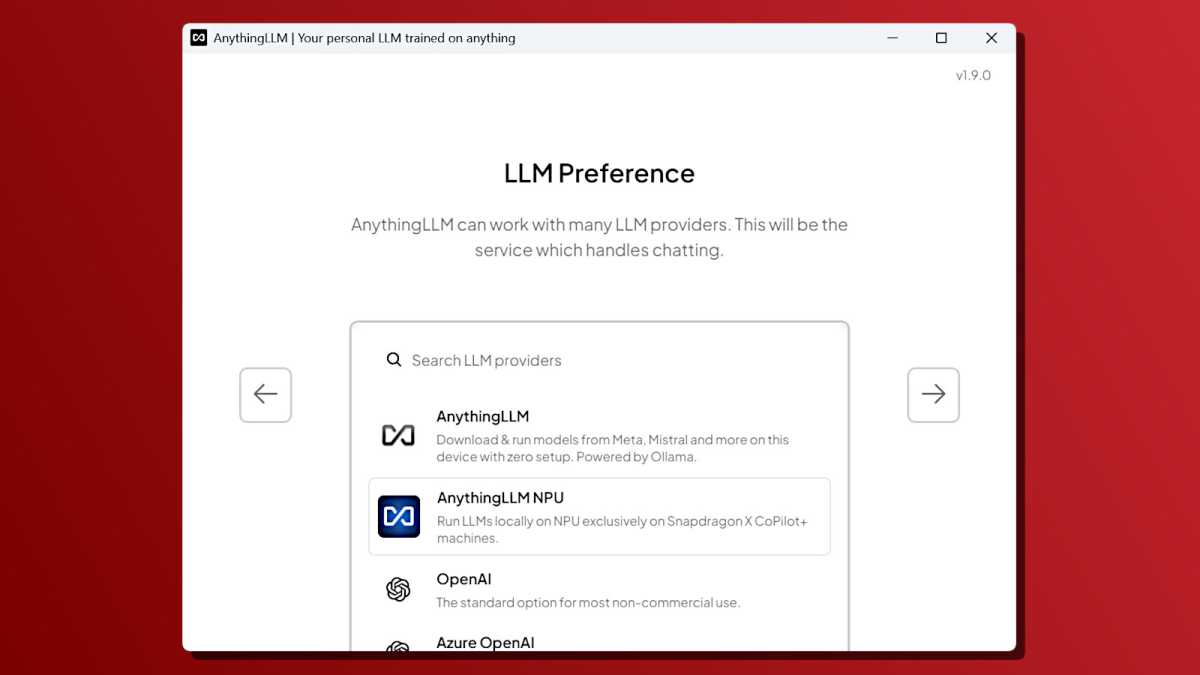

AnythingLLM is an exception

While trawling the web to find out if there were any popular local LLM tools that supported NPUs, I found this one: AnythingLLM. This tool has an NPU backend that supports the Qualcomm Hexagon NPU on Qualcomm Snapdragon X systems. But that’s it. No support for NPUs on Intel or AMD systems.

Qualcomm has an excited blog post talking about this tool. When I downloaded it to try it out on my Qualcomm Snapdragon X-powered Surface Laptop, I ran into a Windows SmartScreen warning. That’s the kind of error you see when you download a rarely used program that Microsoft’s security defenses aren’t familiar with.

What does that mean? Here’s the single most polished solution for running local LLMs on an NPU… and no one is using it. It’s so off the beaten path that it trips Windows’ security warnings.

AnythingLLM is just one example of the problem. There are other apps that run LLMs on NPUs, but they’re mostly confined to developer tech demos. For example, Intel has OpenVINO GenAI software meant for developers, but it’s nowhere close to the “just a few clicks” experience of LM Studio and other popular GPU-based local AI tools.

NPUs were supposed to be mainstream, but GPUs are winning

What’s funny is that NPUs were supposed to democratize local AI. The idea was that GPUs were too expensive and power-hungry for local AI features. So, instead of a PC with a discrete GPU, people could run local AI features on a power-efficient NPU. GPUs were the “enthusiast” option while NPUs would be the easy-to-use “mainstream” option.

That dream hasn’t just failed to materialize; it has completely collapsed. If you want easy-to-use local AI tools, you want a PC with a powerful GPU so you can use the “just a few clicks” tools mentioned above. If you really want to use local AI on a lightweight laptop with an NPU, you’ll either have to dig through obscure tech demos designed for developers or be limited to the handful of Copilot+ PC AI features built into Windows.

But those features are toys compared to the kinds of local LLMs that anyone with a GPU can run in LM Studio in just a few clicks. Even if we’re just talking about AI-powered webcam and microphone effects, the free and easy-to-use Nvidia Broadcast app delivers much more powerful effects than Microsoft’s Windows Studio Effects solution… and all you need is a PC with a modern Nvidia GPU.

Microsoft shot itself in the foot

Since the launch of Copilot+ PCs, Microsoft has repeatedly told the people who are actually using local AI tools (like LM Studio, Ollama, Llama.cpp, and others) that AI PCs aren’t for them.

Microsoft was very clear that built-in Windows AI features should only run on NPUs and aren’t suitable for use on GPUs. Even if you care about local AI, Microsoft says you can’t have built-in Windows AI features on your NPU-lacking PC. I found that out the hard way with my $3,000 gaming PC that can’t run Copilot+ features.

As a result, local AI users have responded by ignoring the AI features built into Windows. Or in other words, Microsoft has created two different local AI experiences:

- The NPU-powered Copilot+ PC playground full of little tech demos that don’t do much. People with these “AI PCs” are mostly unimpressed and think local AI can’t do much.

- The GPU-powered PC experience full of open-source tools that Microsoft ignores. People using these “AI PCs” realize that local AI is interesting, but they don’t engage with any Microsoft AI tools.

What a complete mess.

If Microsoft, Intel, or another big company had paid software engineers to focus on integrating NPU support into existing local AI tools with real-world adoption, perhaps we’d be in a different spot. Instead, I’m left looking at the great NPU push and concluding that it was just marketing that failed to deliver on what it promised.

It’s no wonder that Microsoft is now talking about how “every Windows 11 PC is becoming an AI PC.” But what does that even mean? You still need a powerful GPU for real local AI. If Microsoft wants to make every Windows PC an “AI PC” by talking up the cloud-powered Copilot chatbot, they could have done that years ago, but that wouldn’t have helped the PC industry sell so many “AI laptops.”

It’s the great NPU failure: the big push for NPUs has amounted to diddly-squat. If you want local AI, just get a PC with a powerful GPU. You’ll be disappointed if you try taking the NPU path.