Kyle Dent is a Research Area Manager for PARC, a Xerox Company, focused on the interaction between people and technology. He also leads the ethics review committee at PARC.

Artificial intelligence is now being used to make decisions about lives, livelihoods, and interactions in the real world in ways that pose real risks to people.

We were all skeptics once. Not that long ago, conventional wisdom held that machine intelligence showed great promise, but it was always just a few years away. Today there is absolute faith that the future has arrived.

It’s not that surprising, with cars that (sometimes and under certain conditions) drive themselves and software that beats humans at games like chess and Go. You can’t blame people for being impressed.

But board games, even complicated ones, are a far cry from the messiness and uncertainty of real life, and autonomous cars still aren’t actually sharing the road with us (at least not without some catastrophic failures).

AI is being used in a surprising number of applications, making judgments about job performance, hiring, loans, and criminal justice, among many others. Most people are not aware of the potential risks in these judgments. They should be. There is a general feeling that technology is inherently neutral, even among many of those developing AI solutions. But AI developers make decisions and choose tradeoffs that affect outcomes. Developers are embedding ethical choices within the technology but without thinking about their decisions in those terms.

These tradeoffs are usually technical and subtle, and the downstream implications are not always obvious at the point the decisions are made.

The fatal Uber accident in Tempe, Arizona, is a (not-subtle) but good illustrative example that makes it easy to see how this happens.

The autonomous vehicle system actually detected the pedestrian in time to stop, but the developers had tweaked the emergency braking system in favor of not braking too much, balancing a tradeoff between jerky driving and safety. The Uber developers opted for the more commercially viable choice. Eventually autonomous driving technology will improve to a point that allows for both safety and smooth driving, but will we put autonomous cars on the road before that happens? Profit interests are pushing hard to get them on the road immediately.

Physical risks pose an obvious danger, but there has been real harm from automated decision-making systems as well. AI does, in fact, have the potential to benefit the world. Ideally, we mitigate the downsides in order to get the benefits with minimal harm.

A significant risk is that we advance the use of AI technology at the cost of reducing individual human rights. We’re already seeing that happen. One important example is that the right to appeal judicial decisions is weakened when AI tools are involved. In many other cases, individuals don’t even know that a choice not to hire, promote, or extend a loan to them was informed by a statistical algorithm.

Buyer Beware

Buyers of the technology are at a disadvantage when they know so much less about it than the sellers do. For the most part, decision makers are not equipped to evaluate intelligent systems. In economic terms, there is an information asymmetry that puts AI developers in a more powerful position over those who might use it. (Side note: the subjects of AI decisions generally have no power at all.) The nature of AI is that you simply trust (or not) the decisions it makes. You can’t ask technology why it decided something or if it considered other alternatives, or suggest hypotheticals to explore variations on the question you asked. Given the current trust in technology, vendors’ promises about a cheaper and faster way to get the job done can be very enticing.

So far, we as a society have not had a way to assess the value of algorithms against the costs they impose on society. There has been very little public discussion even when government entities decide to adopt new AI solutions. Worse than that, information about the data used for training the system, plus its weighting schemes, model selection, and other choices vendors make while developing the software, is deemed a trade secret and therefore not available for discussion.

The Yale Journal of Law and Technology published a paper by Robert Brauneis and Ellen P. Goodman in which they describe their efforts to test the transparency around government adoption of data analytics tools for predictive algorithms. They filed forty-two open records requests with various public agencies about their use of decision-making support tools.

Their “specific goal was to assess whether open records processes would enable citizens to discover what policy judgments these algorithms embody and to evaluate their utility and fairness”. Nearly all of the agencies involved were either unwilling or unable to provide information that could lead to an understanding of how the algorithms worked to decide citizens’ fates. Government record-keeping was one of the biggest problems, but companies’ aggressive trade secret and confidentiality claims were also a significant factor.

Using data-driven risk assessment tools can be useful, especially in identifying low-risk individuals who can benefit from reduced prison sentences. Reduced or waived sentences alleviate stresses on the prison system and benefit the individuals, their families, and communities as well. Despite the possible upsides, if these tools interfere with Constitutional rights to due process, they are not worth the risk.

All of us have the right to question the accuracy and relevance of information used in judicial proceedings and in many other situations as well. Unfortunately for the citizens of Wisconsin, the argument that a company’s profit interest outweighs a defendant’s right to due process was affirmed by that state’s supreme court in 2016.

Fairness is in the Eye of the Beholder

Of course, human judgment is biased too. Indeed, professional cultures have had to evolve to address it. Judges, for example, strive to separate their prejudices from their judgments, and there are processes to challenge the fairness of judicial decisions.

In the United States, the 1968 Fair Housing Act was passed to ensure that real-estate professionals conduct their business without discriminating against clients. Technology companies do not have such a culture. Recent news has shown just the opposite. For individual AI developers, the focus is on getting the algorithms correct with high accuracy, for whatever definition of accuracy they assume in their modeling.

I recently listened to a podcast where the conversation wondered whether talk about bias in AI wasn’t holding machines to a different standard than humans, seeming to suggest that machines were being put at a disadvantage in some imagined competition with humans.

As true technology believers, the host and guest eventually concluded that once AI researchers have solved the machine bias problem, we’ll have a new, even better standard for humans to live up to, and at that point the machines can teach humans how to avoid bias. The implication is that there is an objective answer out there, and while we humans have struggled to find it, the machines can show us the way. The truth is that in many cases there are contradictory notions about what it means to be fair.

A handful of research papers have come out in the past couple of years that tackle the question of fairness from a statistical and mathematical point of view. One of the papers, for example, formalizes some basic criteria to determine if a decision is fair.

In their formalization, in most situations, differing ideas about what it means to be fair are not just different but actually incompatible. A single objective solution that can be called fair simply doesn’t exist, making it impossible for statistically trained machines to answer these questions. Considered in this light, a conversation about machines giving human beings lessons in fairness sounds more like theater of the absurd than a purported thoughtful conversation about the issues involved.
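To make the incompatibility concrete, here is a minimal sketch in Python. It is not taken from the paper mentioned above, which is not named here; it illustrates one well-known result of this kind using plain confusion-matrix arithmetic. The function name, the two groups, and all of the numbers are hypothetical and chosen only for illustration. Suppose a risk tool is equally reliable for two groups in two senses: a "high risk" label is right equally often in both (the same positive predictive value), and it misses the same share of true cases in both (the same false negative rate). If the underlying base rates differ, the false positive rates are then forced apart.

```python
def implied_false_positive_rate(base_rate, ppv, fnr):
    """False positive rate forced by arithmetic once the other three are fixed.

    base_rate: share of the group that truly belongs to the positive class
    ppv:       share of positive predictions that are correct
    fnr:       share of true positives that the tool misses
    All names and values here are hypothetical, for illustration only.
    """
    true_positive_share = base_rate * (1.0 - fnr)              # correctly flagged
    false_positive_share = true_positive_share * (1.0 - ppv) / ppv  # wrongly flagged
    return false_positive_share / (1.0 - base_rate)           # as a share of true negatives


# Same predictive value and same miss rate for both groups (assumed numbers).
ppv, fnr = 0.7, 0.3

fpr_group_a = implied_false_positive_rate(base_rate=0.5, ppv=ppv, fnr=fnr)
fpr_group_b = implied_false_positive_rate(base_rate=0.2, ppv=ppv, fnr=fnr)

print(f"Group A false positive rate: {fpr_group_a:.3f}")
print(f"Group B false positive rate: {fpr_group_b:.3f}")
```

With these assumed numbers, people in group A who would never reoffend are wrongly flagged about four times as often as their counterparts in group B, even though the tool is "equally accurate" for both groups by the other two measures. Which of these measures should be equalized is a value judgment, not something the arithmetic can settle.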

When there are questions of bias, a discussion is necessary. What it means to be fair in contexts like criminal sentencing, granting loans, and job and college opportunities, for example, has not been settled and unfortunately contains political elements. We’re being asked to join in an illusion that artificial intelligence can somehow de-politicize these issues. The fact is, the technology embodies a particular stance, but we don’t know what it is.

Technologists with their heads down, focused on algorithms, are determining important structural issues and making policy choices. This removes the collective conversation and cuts off input from other points of view. Sociologists, historians, political scientists, and above all stakeholders within the community would have a lot to contribute to the debate. Applying AI to these tricky problems paints a veneer of science that tries to dole out apolitical solutions to difficult questions.

Who Will Watch the (AI) Watchers?

One major driver of the current trend to adopt AI solutions is that the negative externalities from the use of AI are not borne by the companies developing it. Typically, we address this situation with government regulation. Industrial pollution, for example, is restricted because it creates a future cost to society. We also use regulation to protect individuals in situations where they may come to harm.

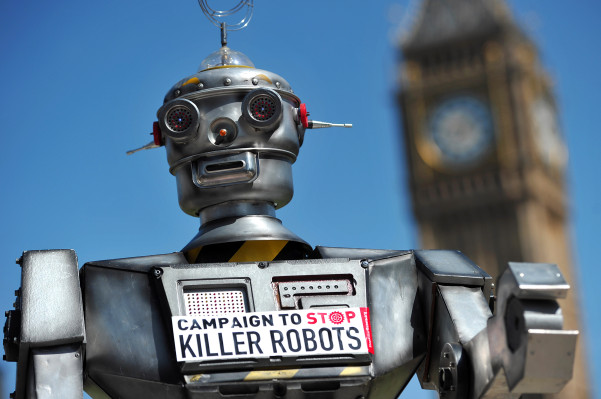

Both of these potential negative consequences exist in our current uses of AI. For self-driving cars, there are already regulatory bodies involved, so we can expect a public dialog about when and in what ways AI-driven vehicles can be used. What about the other uses of AI? Currently, except for some action by New York City, there is exactly zero regulation around the use of AI. The most basic assurances of algorithmic accountability are not guaranteed for either users of technology or the subjects of automated decision making.

Image via Getty Images / nadia_bormotova

Unfortunately, we can’t leave it to companies to police themselves. Facebook’s slogan, “Move fast and break things,” has been retired, but the mindset and the culture persist throughout Silicon Valley. An attitude of doing what you think is best and apologizing later continues to dominate.

This has apparently been effective when building systems to upsell consumers or connect riders with drivers. It becomes completely unacceptable when you make decisions affecting people’s lives. Even if well-intentioned, the researchers and developers writing the code don’t have the training or, at the risk of offending some wonderful colleagues, the inclination to think about these issues.

I’ve seen firsthand too many researchers who demonstrate a surprising nonchalance about the human impact. I recently attended an innovation conference just outside of Silicon Valley. One of the presentations included a doctored video of a very famous person delivering a speech that never actually took place. The manipulation of the video was completely imperceptible.

When the researcher was asked about the implications of deceptive technology, she was dismissive of the question. Her answer was essentially, “I make the technology and then leave those questions to the social scientists to work out.” This is just one of the worst examples I’ve seen from many researchers who don’t have these issues on their radars. I suppose that requiring computer scientists to double major in moral philosophy isn’t practical, but the lack of concern is striking.

Recently we learned that Amazon abandoned an in-house technology that they had been testing to select the best resumes from among their applicants. Amazon discovered that the system they created developed a preference for male candidates, in effect penalizing women who applied. In this case, Amazon was sufficiently motivated to ensure their own technology was working as effectively as possible, but will other companies be as vigilant?

As a matter of fact, Reuters reports that other companies are blithely moving ahead with AI for hiring. A third-party vendor selling such technology actually has no incentive to test that it’s not biased unless customers demand it, and as I mentioned, decision makers are mostly not in a position to have that conversation. Again, human bias plays a part in hiring too. But companies can and should deal with that.

With machine learning, they can’t be sure what discriminatory features the system might learn. Absent market forces, unless companies are compelled to be transparent about the development and their use of opaque technology in domains where fairness matters, it’s not going to happen.

Accountability and transparency are paramount to safely using AI in real-world applications. Regulations could require access to basic information about the technology. Since no solution is completely accurate, the regulation should allow adopters to understand the effects of errors. Are errors relatively minor or major? Uber’s use of AI killed a pedestrian. How bad is the worst-case scenario in other applications? How are algorithms trained? What data was used for training, and how was it assessed to determine its fitness for the intended purpose? Does it truly represent the people under consideration? Does it contain biases? Only by having access to this kind of information can stakeholders make informed decisions about appropriate risks and tradeoffs.

At this point, we might have to face the fact that our current uses of AI are getting ahead of its capabilities and that using it safely requires a lot more thought than it’s getting now.